Walter Kriha

Copyright © 2001 Walter Kriha

Permission is granted to copy, distribute, and/or modify this document under the terms of the GNU Free Documentation License, Version 1.1 or any later version published by the Free Software Foundation; with no Invariant Sections, no Front-Cover Texts, and no Back-Cover Texts.

3 May 2001

This paper lives at http://www.kriha.org/. If you're reading it somewhere else, you may not have the latest version.

Written toward the end of a larger project, this paper contains many ideas from my friends with whom I have been building this enterprise portal. I would like to say thanks to our project team:

- Andreas Kapp
- Andy Binggeli
- Christophe Gevaudan
- Guido Lombardini
- Teresa Chen
- Stephan Bielmann
- Joachim Matheus
- Gabriel Bucher
- Heinz Dürr
- Kaspar Thoman
- Hans-Egon Hebel
- Dmitri Fertman
- Gabriela Dörig
- Ralf Steck
- Bernhard Scheffold
- Yves Thommen
- Rudi Flütsch
- Dani Fischer
- Reto Hirt
- Christoph Schwizer

and to our project leaders:

- Christine Keller
- Marcus Weber
- David Bühler
- Hans Feer
- Ronny Burk

Table of Contents

- Preface

- 1. Introduction

- 2. What is an Advanced Enterprise Portal?

- 3. Portal Conceptual Model

- 4. Reliability

- 5. Performance

- Caching

- The end-to-end dynamics of Web page retrievals

- Cache invalidation

- Information Architecture and Caching

- Client Side Caching

- Results and problems with client side caching

- Proxy Side Caching

- (Image) Caching and Encryption

- Server Side Caching: why, what, where and how much?

- Cache implementations

- Physical Architecture Problems: Clones and Cache Synchronization

- Portlets

- Fragments

- Pooling

- GUI design for speed

- Incremental page loading

- Information ordering

- The big table problem

- Throughput

- Asynchronous Processing

- Extreme Load Testing

- 6. Portal Architecture

- 7. Portal Maintainability

- 8. Content Management Integration

- 9. Heterogeneous and Distributed Searching

- 10. Mining the Web-House

- 11. Portal Extensions

- 12. Personalization

- 13. Resources

- A. About the paper

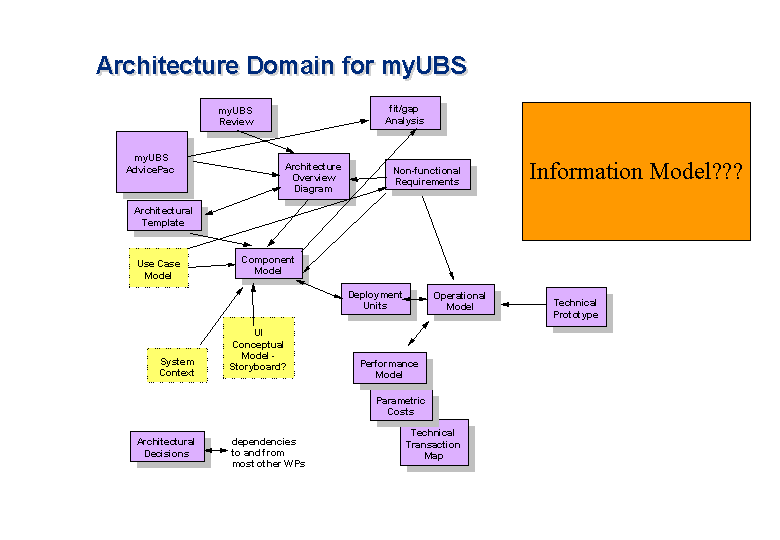

This document describes the specific problems of a large-scale, high-volume portal site (in this case a portal for a large international bank). It shows what is necessary to achieve a large-scale, high-speed portal, both in architecture and in implementation, and on that basis it questions whether this effort is justified for all portal needs. It is not an introduction to Enterprise Portals. It assumes working knowledge of portals and the associated technology.

If you are just getting started, the resources chapter contains links to many publicly available papers you might consider reading.


Just about a year ago, in May 2000, AEPortal (Advanced Enterprise Portal) started as a typical web project: put a wrapper around some existing services, retrieve some existing data sources and hide everything behind a common GUI. Clearly the functional requirements were the most important ones at that time.

Based on a rudimentary framework (similar to an early version of STRUTS from apache.org) modeled roughly after the J2EE architecture, the team started to write handlers, models and JSPs.

It looked like AEPortal would become an Enterprise Portal.

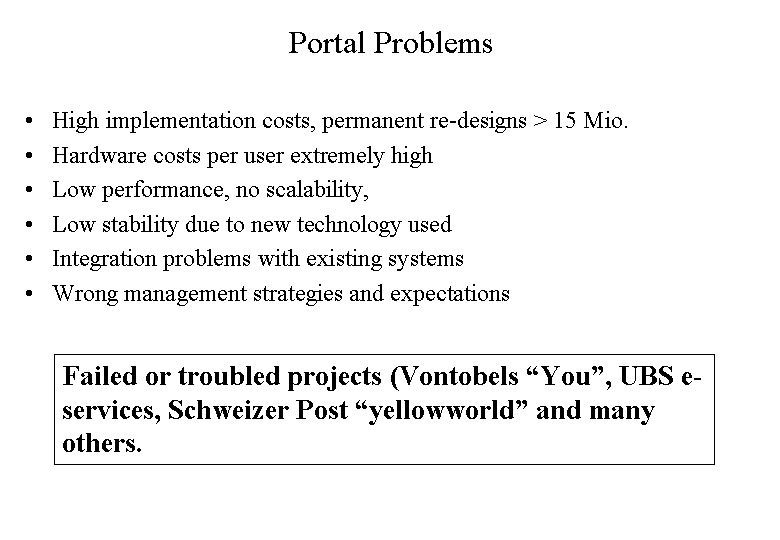

In the middle of October last year, when load tests and preparations for deployment started, the non-functional requirements made a sudden and violent appearance, and they continue to dominate AEPortal to this day.

Much has changed since then. AEPortal has been extended in many ways to cope with the non-functional requirements – going far beyond a regular web application. This document describes the portal specific aspects of AEPortal with a special focus on reliability, stability, performance and throughput.

At the end, the organizational problems that had a major effect on AEPortal are described as well.

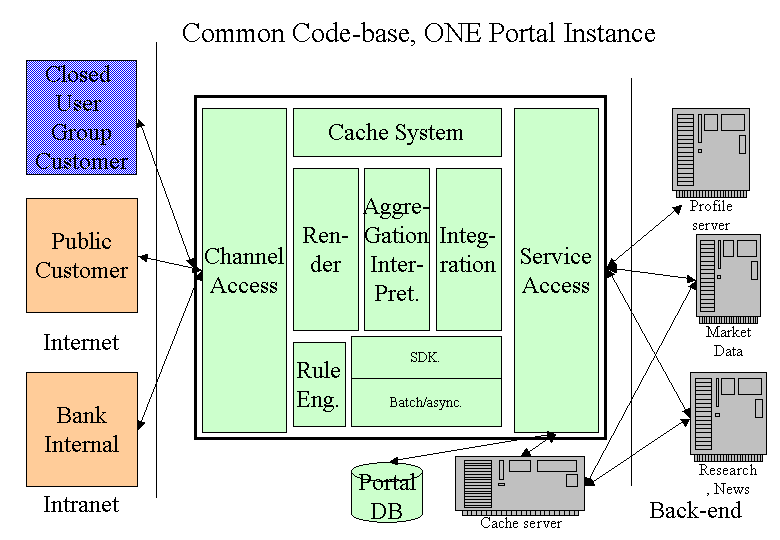

As an enterprise portal, AEPortal goes beyond a simple web application architecture. It relies on internal multithreading, read-ahead and a massive amount of caching to concentrate a large number of external services onto a personalized homepage while still remaining responsive.

Being a portal, AEPortal needs to combine transactional features (e.g. Telebanking) with publishing features (e.g. News). Right now it does a fairly good job on the transactional side, but it is very weak with respect to publishing. This needs to change quickly, and the document tries to highlight the problems and give hints on possible solutions.

This document also explains the technology used in AEPortal. It discusses critical aspects such as the behavior in case of external service failures. These topics are not covered in the Infrastructure Architecture Document (24).

Caching plays a major role in AEPortal. Every site that delivers dynamic AND personalized content has a severe performance problem, which is currently solved or mitigated through large hardware investments.

Example 1.1. A little hardware at Charles Schwab

A recently published paper on the portal architecture at Charles Schwab shows that IBM delivered 500 (!) multiprocessor Unix stations to run the personalized portal. Of course, the fact that the portal still runs on CGI technology certainly accounts for a larger number of workstations; still, the numbers are frightening, both financially and from a system management point of view.

Another goal of this document is to collect ideas from within the AEPortal team and from our friends, so please do not hesitate to add to it. There are still a lot of features missing that are vital for an enterprise-scale portal (meta-information handling, search features, a service development kit, maintainability in production, etc.).


To get a better understanding of what really happens during a page request (and as an antidote to all the fancy-sounding Java-isms), I found the following paragraph from Jon S. Stevens quite enlightening (please replace "Turbine" with "AEPortal"; it is the same here):

The current encouragement that Turbine gives to developers is to do a mapping between one Screen (Java) and one Template (WM or V). The way it works is that you build up a Context object that is essentially a Hashtable that contains all of the data that is required to render a particular template. Within the template, you refer to that data in order to format it for display. I will refer to this as the "Push MVC Model." This IMHO is a perfectly acceptable, easy to understand and implement approach.

We have solved the problem of being able to display the data on a template without requiring the engineer to make modifications to the Java code. In other words, you can modify the look and feel of the overall website without the requirement of having a Java engineer present to make the changes. This is a very good step forward. However, it has a shortcoming in that it makes it more difficult to allow the template designer (ie: a non programmer) the ability to move information on a template from one template to another template because it would require the logic in the Java code to be modified as well. For example, say you have a set of "wizard" type screens and you want to change the order of execution of those screens or even the order of the fields on those screens. In order to do so, you can't simply change the Next/Back links you need to also change the Java code.

So what does this mean for a JSP developer who wants to create a new view on some information?

1) Find out what handlers will create what pieces of information ("models" in AEPortal terms). Do this by looking at the handler(s) code.

2) If you need pieces of information from several handlers, have someone create a handler that calls all the needed handlers internally.

3) Go into the source code of the handler(s) and write down the keys used to store the pieces of information into the request hashtable.

4) Extract the information in the JSP.

This is a cumbersome and error-prone process and it requires programming skills at all levels. The "re-use" of handlers creates subtle dependencies. There is NO definition of page content or information.

The whole thing turned upside down would look like this:

"Instead of the developer telling the designer what Context names to use for each and every screen, there is instead a set of a few objects available for the template designer to pick and choose from. These objects will provide methods to access the underlying information either from the database or from the previously submitted form information."

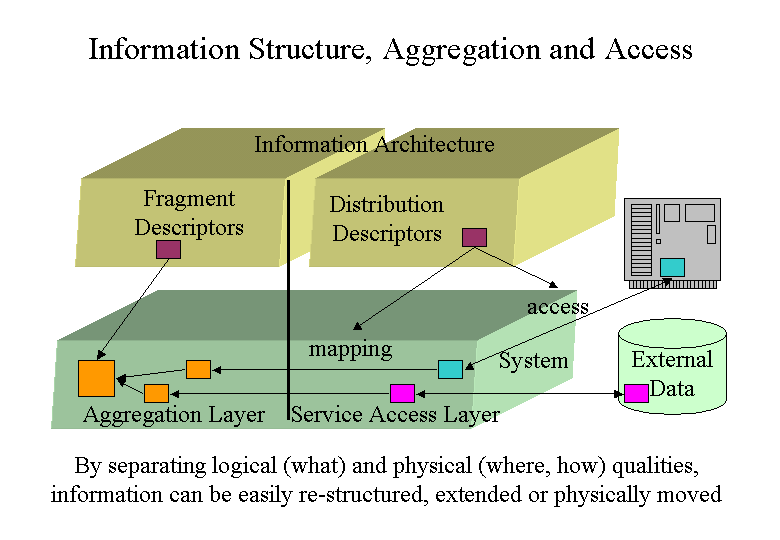

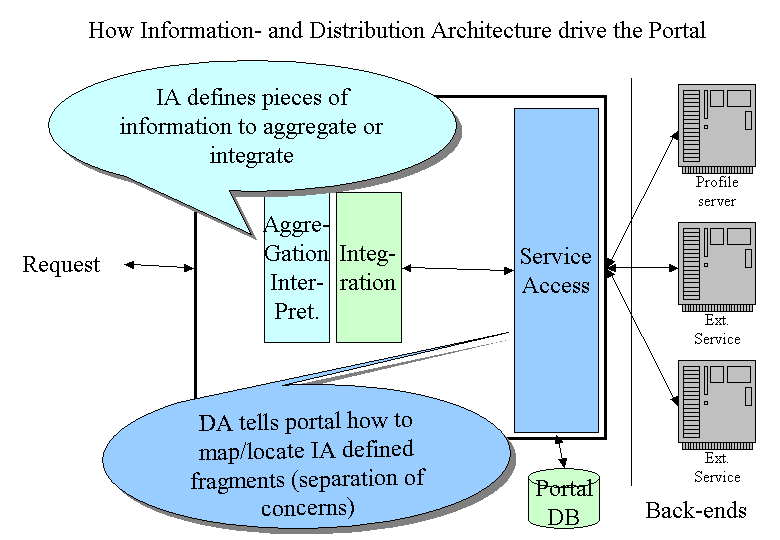
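The following minimal Java sketch makes the difference between the push model and the pull model quoted above concrete. All names (`NewsService`, `NewsHandler`, `NewsTool`, the key `"newsItems"`) are hypothetical and are not taken from Turbine or AEPortal. In the push version the template has to know the string key and the type that the handler chose. In the pull version it only asks a tool object for the information it wants.

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical backend service; returns fixed data for illustration.
class NewsService {
    List<String> headlines(String user) {
        return Arrays.asList("Markets up", "Rates unchanged");
    }
}

// Push model: the handler decides what goes into the context and under which key.
class NewsHandler {
    private final NewsService service = new NewsService();

    void handle(String user, Map<String, Object> context) {
        // "newsItems" is an implicit contract: the template author must read this code to find it.
        context.put("newsItems", service.headlines(user));
    }
}

// Pull model: the template receives a tool object and asks it for the information it needs.
class NewsTool {
    private final NewsService service = new NewsService();
    private final String user;

    NewsTool(String user) {
        this.user = user;
    }

    List<String> getHeadlines() {
        return service.headlines(user);
    }
}

class PushPullDemo {
    // Stand-in for a push-style template: must know the key and the stored type.
    @SuppressWarnings("unchecked")
    static String renderPush(Map<String, Object> context) {
        List<String> items = (List<String>) context.get("newsItems");
        return items == null ? "" : String.join(", ", items);
    }

    // Stand-in for a pull-style template: only names the information it wants.
    static String renderPull(NewsTool news) {
        return String.join(", ", news.getHeadlines());
    }

    public static void main(String[] args) {
        Map<String, Object> context = new HashMap<>();
        new NewsHandler().handle("alice", context);
        System.out.println(renderPush(context));
        System.out.println(renderPull(new NewsTool("alice")));
    }
}
```

With the pull version, a designer can move the headlines onto another page without asking for a new combined handler. The price is that the tool object must fetch or cache the data itself.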

But this would require us to have a level beyond the (procedural) "handlers" where definitions of pages and page content, fragments and parts of information exist. This in turn would allow us to build editors even for dynamic and personalized pages.

But an even more important side-effect is that this architecture would support information fragments much better. And fragments are the basic building blocks for caches and allow the caching even of highly dynamic and personalized pages. This will be explained further down.

And last but not least, Andreas Kapp pointed me to another possibility: not only to cache those fragments but also to make them persistent, on a per-user basis. This means that the personalization decisions are frozen within a personal fragment, and there is no need to re-calculate the filters, selections etc. during each request. Only the content that has really changed needs to be filled in.

We will discuss the requirements of persistent, self-contained fragments in the chapter on fragments.


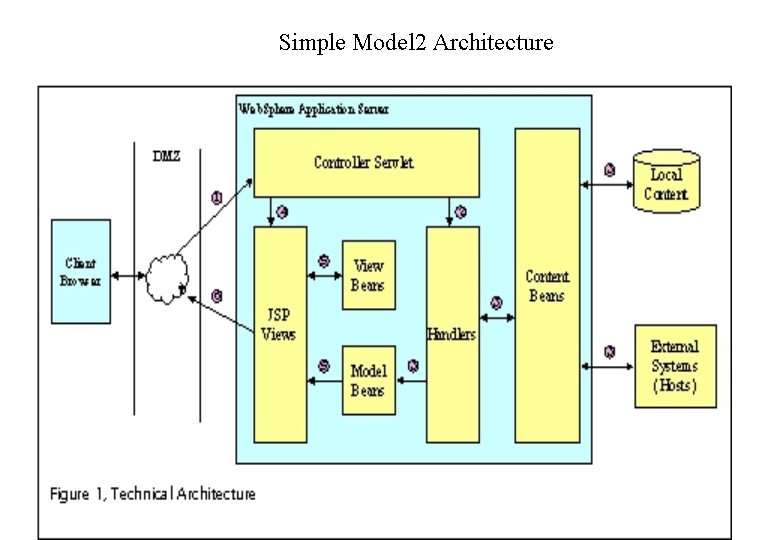

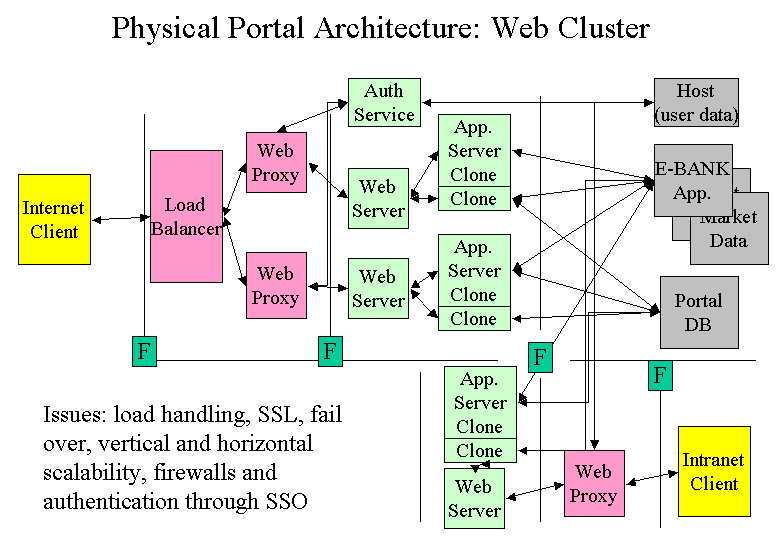

The diagram below, taken from the Infrastructure Overview, shows the typical flow of control for a simple page request in a J2EE Model 2 architecture.

POST/GET. The request is submitted from the client browser and arrives at the Controller servlet. There is a single instance of the Controller servlet per web application. The Controller is multi-threaded and processes multiple client requests concurrently. All requests for the web application arrive at the Controller.

Dispatch. The controller determines who the requesting user is, determines which page they are requesting, sets up the necessary context and invokes the correct Handler to process that request.

Create/Update. There is typically one Business Logic Handler per page. The handler is responsible for initiating the business operations requested by the user. Typically this will involve interaction with backend systems to retrieve or modify some persistent state. When the processing has completed, the Handler is responsible for creating or modifying State Data (the Model) held in the Web Application to represent the results. The Handler then completes and control passes back to the Controller.

Forward. The Controller then determines which JSP to invoke to display the results. Normally the JSP is determined automatically from the requested page; however, the Handler may override this default if it wishes.

Extract. The role of the JSP is to render the page as HTML. When the JSP needs to display application data it extracts it directly from the State Data (Model) that has previously been setup by the Handler.

Respond. When the JSP has finished rendering the page, it is returned to the client browser for display.

Therefore, each page to be delivered to the client typically involves writing a triplet comprising: a Handler to process the Business Logic; a Model to hold the result data; a JSP to display the results back to the client.
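The triplet and the six steps can be reduced to a few lines of Java. This is a sketch and not the JADE API. `Handler`, `Controller`, the `"view"` override key and the `"quotes"` page are assumptions. A plain `Map` stands in for the request-scoped state, and a function returning a string stands in for the JSP.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Function;

// A handler runs the business logic and leaves its result (the "model") in the request state.
interface Handler {
    void handle(Map<String, String> params, Map<String, Object> state);
}

class Controller {
    private final Map<String, Handler> handlers = new HashMap<>();
    private final Map<String, Function<Map<String, Object>, String>> views = new HashMap<>();

    // One page = one handler plus one view; the model is whatever the handler stores in the state.
    void register(String page, Handler handler, Function<Map<String, Object>, String> view) {
        handlers.put(page, handler);
        views.put(page, view);
    }

    // Dispatch, Create/Update, Forward, Extract and Respond, all on the calling (servlet) thread.
    String service(String page, Map<String, String> params) {
        Handler handler = handlers.get(page);                               // Dispatch
        if (handler == null) {
            return "404";
        }
        Map<String, Object> state = new HashMap<>();
        handler.handle(params, state);                                      // Create/Update the model
        String viewName = (String) state.getOrDefault("view", page);        // the handler may override the view
        Function<Map<String, Object>, String> view = views.get(viewName);   // Forward
        return view == null ? "500" : view.apply(state);                    // Extract + Respond
    }
}

class Model2Demo {
    public static void main(String[] args) {
        Controller controller = new Controller();
        controller.register("quotes",
                (p, s) -> s.put("quote", p.get("symbol") + "=42.0"),
                s -> "<p>" + s.get("quote") + "</p>");
        Map<String, String> params = new HashMap<>();
        params.put("symbol", "UBS");
        System.out.println(controller.service("quotes", params));
    }
}
```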

AEPortal uses the JADE infrastructure to support the above process.

The processing of a simple page request has the following RAS properties:

During the request the AEPortal database is contacted (e.g. for profile information) and optionally an external service is accessed (e.g. to load a research document from a web server)

Processing is sequential and response-time constrained: the handler cannot use wait times (e.g. waiting for network responses) for other tasks

If the handler is blocked, the whole request coming from the web container is blocked too. If the maximum number of open connections is reached, no new request can enter AEPortal WHILE the handler(s) are busy.

No timeouts are specified for a handler. If timeouts happen they do so within the external service access API.

There is currently no external service access API that would offer a Quality-of-Service interface e.g. to set timeouts or inquire the status of a service.

Let’s assume that an external service becomes unavailable. Eventually a handler waiting for this resource will get a timeout in the access API of that service and return with an error. This may take x seconds to become effective. (We need to know more about timeouts in our access APIs).

While waiting for the external resource a handler will hold on to some system resources but at the same time block an entry into the system. The effects on the system resources should be benign.

The user will have to wait until the timeout happens and the request returns. A different user with a request to a different resource will not be affected but a user going after the same resource will see the same delay while waiting for a timeout.

It would be an improvement for both system and user if we could tag a service as being unavailable and not start any new requests against this service. But this raises a couple of questions:

For now we need to make sure that the timeouts hidden in our access APIs are short enough to avoid blocking too many requests for too long.

Debugging has shown some requests waiting 4 minutes or more, e.g. on Quotes. This is a severe drain on the system and a bad user experience.
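One way to put an explicit upper bound on a service call is to run the call on a pool thread and wait with a deadline at the point where the handler makes it. This does not depend on whatever timeouts the access API hides. The following is a minimal sketch. It assumes `java.util.concurrent`, which arrived with Java 5 and therefore postdates this paper, and a hypothetical slow quote service.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;

class BoundedCall {
    private final ExecutorService pool;

    BoundedCall(ExecutorService pool) {
        this.pool = pool;
    }

    // Runs the service call on a pool thread and waits at most timeoutMillis for the answer.
    <T> T callWithTimeout(Callable<T> call, long timeoutMillis, T fallback) {
        Future<T> future = pool.submit(call);
        try {
            return future.get(timeoutMillis, TimeUnit.MILLISECONDS);
        } catch (TimeoutException e) {
            // Only interrupts the worker; a blocking java.io socket read ignores the interrupt.
            future.cancel(true);
            return fallback;
        } catch (ExecutionException e) {
            return fallback;                     // the service API itself failed
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return fallback;
        }
    }
}

class BoundedCallDemo {
    public static void main(String[] args) {
        ExecutorService pool = Executors.newFixedThreadPool(2);
        BoundedCall bounded = new BoundedCall(pool);
        // Hypothetical quote service that hangs far longer than the caller is willing to wait.
        String quote = bounded.callWithTimeout(() -> {
            Thread.sleep(5_000);
            return "42.0";
        }, 100, "n/a");
        System.out.println(quote);
        pool.shutdownNow();
    }
}
```

The servlet thread is released after the deadline, but `cancel(true)` only interrupts the worker. If the access API blocks in a way that ignores interrupts, the pool thread stays busy until the API's own timeout fires.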

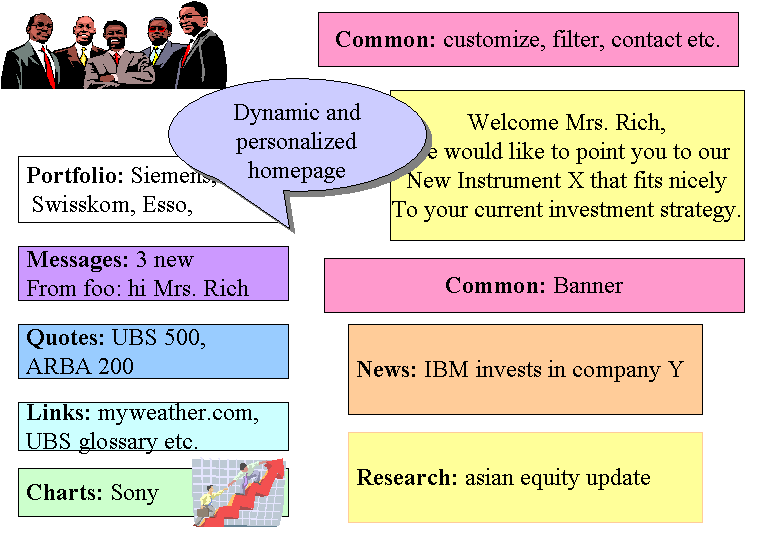

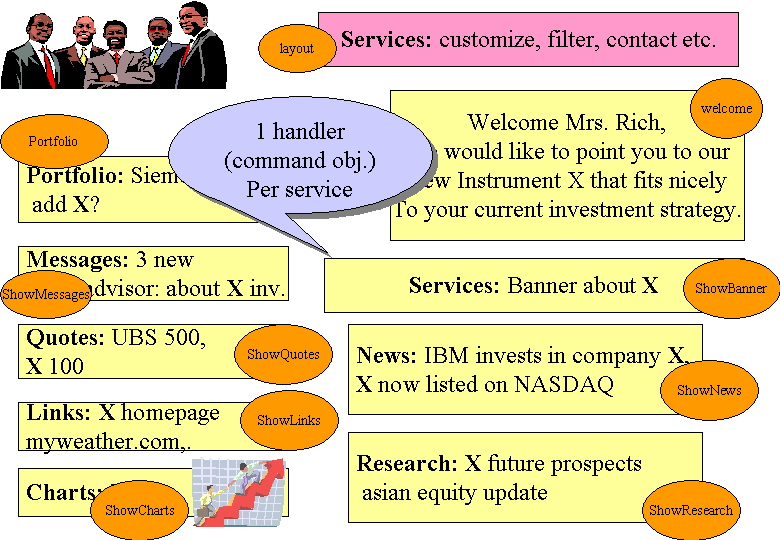

The homepage of AEPortal is called a "Multi-Page" because it combines several otherwise independent services on one page. Initially the internal processing of the homepage happened in the same way as for any other page: a handler running on a server thread (i.e. the thread that comes from the web container) collects the information and forwards the results to the view (via the controller).

It soon turned out that the AEPortal homepage had special requirements that were not easily met by the standard page processing:

Several external services needed to be contacted for one homepage request

Every additional homepage service added to the overall request time (= the sum of all individual processing times)

Rendering could not start until all services had finished. Frames were not allowed, so no partial rendering was possible. Users saw nothing while waiting for the whole page to complete

In case of a service failure (e.g. news), not only would individual page requests for news block; almost every homepage would be affected too, because news is part of many personal homepage configurations

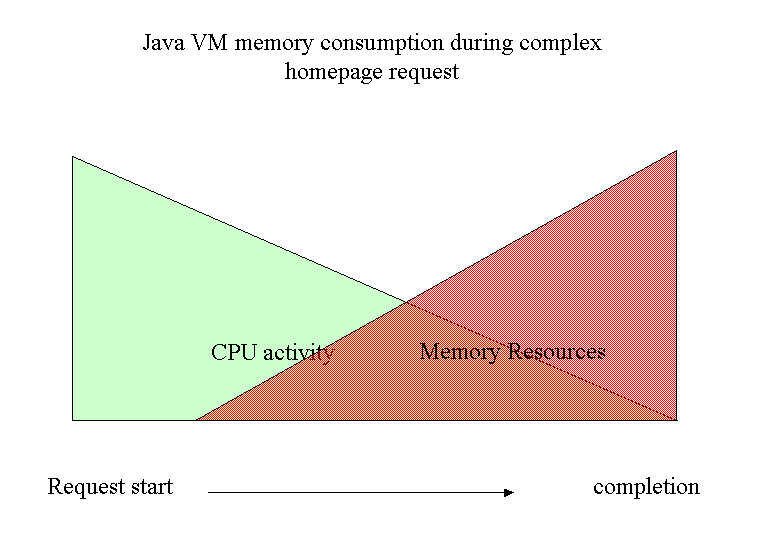

A blocking simple page request holds on to a small number of system resources. A blocking homepage request would hold on to a much larger number of system resources, potentially causing large drops in available memory (something we have observed while debugging garbage collection).

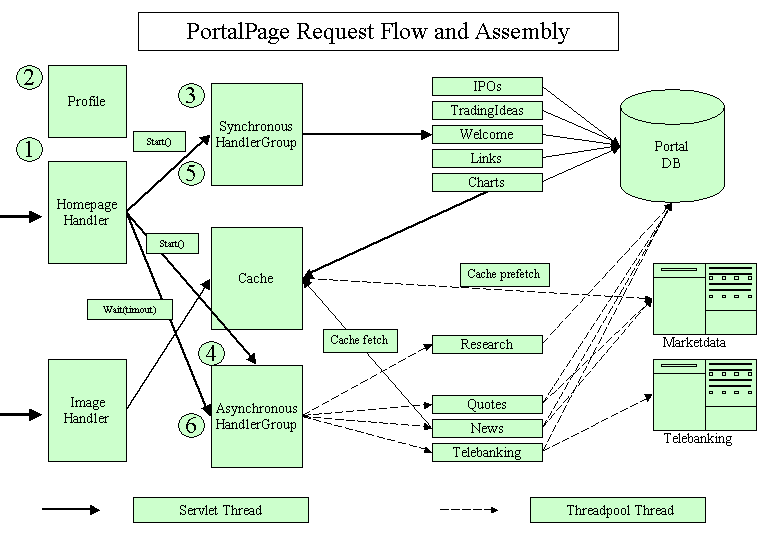

While the processing of multi-pages would have to be somewhat different, tight deadlines made it necessary to re-use the existing handlers and handler architecture. This led to the following architecture:

The processing steps 1 – 3 from above apply here as well. The homepage handler is a regular handler like any other.

Using the servlet thread, the controller forwards the incoming request to the homepage Handler.

Homepage handler reads profile information to select which services of the homepage need to run for this user (access rights are of course respected as well)

Every service has a page description in ControllerConfig.xml telling the system about the necessary access token, handler names and last but not least which data sources the service will use. Depending on the data sources (available are: AEPortalDB, OTHERDB, HTTP) the handler for this service will be put in one of two HandlerGroup objects: Handlers using only the AEPortalDB are put in the synchronous handler group. Handlers using HTTP sources end up in the asynchronous handler group.

Homepage handler calls start() on the asynchronous group first. It iterates over all available handlers and starts every one with a thread from the AEPortal threadpool.

The behavior of the threadpool needs to be checked. Will every new request be put into a large queue or will the requester block on adding to the queue if all threads are busy? This will affect system behavior on a loaded system.

Then homepage handler calls start() on the synchronous group. Again it iterates over all available handlers but uses its own thread (which is the servlet thread) to execute the handlers sequentially.

After executing all handlers in the synchronous group homepage handler calls wait(timeout) on the asynchronous group. The timeout is configurable (currently 30 seconds). When all handlers are finished OR the timeout has happened, homepage handler returns back to Controller.
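The two-group scheme can be sketched as follows. This is an illustration under assumptions and not AEPortal's actual `HandlerGroup`. Handlers are plain `Runnable`s, and a `java.util.concurrent` executor replaces the AEPortal threadpool. `waitForAll` applies one deadline to the whole group instead of one timeout per handler.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;

class HandlerGroup {
    private final List<Runnable> handlers = new ArrayList<>();
    private final List<Future<?>> running = new ArrayList<>();

    void add(Runnable handler) {
        handlers.add(handler);
    }

    // Asynchronous group: every handler is handed to a pool thread.
    void startAsync(ExecutorService pool) {
        for (Runnable h : handlers) {
            running.add(pool.submit(h));
        }
    }

    // Synchronous group: handlers run one after the other on the caller's (servlet) thread.
    void runSync() {
        for (Runnable h : handlers) {
            h.run();
        }
    }

    // Waits until every started handler is done or the deadline passes; returns how many are done.
    int waitForAll(long timeoutMillis) {
        long deadline = System.nanoTime() + TimeUnit.MILLISECONDS.toNanos(timeoutMillis);
        int done = 0;
        for (Future<?> f : running) {
            long remaining = Math.max(0, deadline - System.nanoTime());
            try {
                f.get(remaining, TimeUnit.NANOSECONDS);
                done++;
            } catch (ExecutionException e) {
                done++;                          // finished, but with an error
            } catch (TimeoutException e) {
                // still running: its thread stays busy after this method returns
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                break;
            }
        }
        return done;
    }
}

class HomepageHandler {
    static int process(ExecutorService pool, HandlerGroup async, HandlerGroup sync, long timeoutMillis) {
        async.startAsync(pool);                  // 1. start the slow, I/O-bound handlers first
        sync.runSync();                          // 2. meanwhile run the database handlers on this thread
        return async.waitForAll(timeoutMillis);  // 3. then wait for the rest, bounded by the timeout
    }
}
```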

The assumptions behind these two groups are as follows:

Handlers in the synchronous group are both fast and reliable (because they only talk to the AEPortal database). They could run within their own threads as well, but the overhead of the additional thread context switches is not worth it. We see execution times of less than one second for the whole synchronous group.

The reasons for handlers to go into the asynchronous group are more diverse. The first and obvious reason is that these handlers experience long waiting times on I/O because they access e.g. other web servers. By starting each request as early as possible we can effectively run several in parallel. This keeps the overall homepage request time from being the sum of all single handler times. Tests have shown significant savings here. To achieve this effect alone, the wait() method would not need a timeout parameter.

The timeout parameter exists because external services not only cause I/O waiting times but are sometimes unreliable as well. The assumption was that a full homepage request should not have to wait for a single handler simply because its associated external service is very slow or even unavailable. While this is a valid requirement, the consequences of the timeout parameter are much more complicated than initially thought. This will be discussed below.

(Research, Telebanking and Charts are currently special cases. Research does NOT use HTTP access for the homepage part, but it is currently fairly slow and is therefore in the asynchronous group. This will change soon, and it will move to the synchronous group. Telebanking differs between development and production, and it is currently not clear how fast and reliable access to its external services will be in production. This is why it is in the asynchronous group even though its runtime in development is only around 50 ms. The charts part of the homepage is worth an extra paragraph below.)

Charts looks like a service that would need an external service (External Data System) for its pictures – and it does so, just not within the charts homepage request.

The homepage request of charts uses the asynchronous requester capabilities of the AEPortal cache and simply requests certain pictures to be downloaded from External Data System (if not yet in the cache).

Charts Handler schedules the request synchronously but the request itself runs in its own thread in the background, taking a thread from the AEPortal threadpool.

The homepage only contains the URLs of the requested images. During rendering of the homepage the browser will request the images asynchronously by going through the AEPortal image handler.

The fact that charts causes a background thread to run is important in case of external system failure: what happens if the charts external service is down? Or under maintenance? Currently the background thread that is actually trying to download the image will get a timeout after a while and finish. In the meantime an image request from the browser will wait on the cache without a timeout. What happens in case of a failure? Will there be a null object in the cache? Anyway – the image request blocking without timeout is not such a big problem because it runs on a servlet thread and therefore blocks an entry channel into AEPortal at the same time – no danger of AEPortal getting overrun.

The background thread of the asynchronous requester is more dangerous because a failure in an external service can lead to all threads of the threadpool being allocated for the cache loader.
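The following sketch of an asynchronous requester addresses three of the concerns raised here:

- Background loads run on a small dedicated pool, so a dead external service can exhaust only that pool and not the threads needed by homepage handlers.
- A failed download is removed from the cache instead of leaving a null or dead entry.
- The browser's image request waits with a timeout.

`ImageCache`, `prefetch` and the loader function are hypothetical names. `CompletableFuture` (Java 8) postdates the paper.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentMap;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;
import java.util.function.Function;

class ImageCache {
    private final ConcurrentMap<String, CompletableFuture<byte[]>> entries = new ConcurrentHashMap<>();
    private final ExecutorService loaderPool;       // small, dedicated pool: loaders cannot starve homepage handlers
    private final Function<String, byte[]> loader;  // hypothetical download from the external chart service

    ImageCache(ExecutorService loaderPool, Function<String, byte[]> loader) {
        this.loaderPool = loaderPool;
        this.loader = loader;
    }

    // Called by the charts handler: schedules a download unless the image is cached or already loading.
    void prefetch(String url) {
        CompletableFuture<byte[]> slot = new CompletableFuture<>();
        if (entries.putIfAbsent(url, slot) != null) {
            return;
        }
        try {
            CompletableFuture.supplyAsync(() -> loader.apply(url), loaderPool)
                    .whenComplete((image, error) -> {
                        if (error != null) {
                            entries.remove(url, slot);   // forget failures so a later request can retry
                            slot.completeExceptionally(error);
                        } else {
                            slot.complete(image);
                        }
                    });
        } catch (RejectedExecutionException e) {
            entries.remove(url, slot);                   // loader pool saturated: do not leave a dead entry
            slot.completeExceptionally(e);
        }
    }

    // Called by the image handler on a servlet thread: waits, but never forever. null = not available.
    byte[] get(String url, long timeoutMillis) {
        prefetch(url);
        CompletableFuture<byte[]> entry = entries.get(url);
        if (entry == null) {
            return null;                                 // the download already failed
        }
        try {
            return entry.get(timeoutMillis, TimeUnit.MILLISECONDS);
        } catch (TimeoutException | ExecutionException e) {
            return null;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return null;
        }
    }
}
```

Because failed entries are removed, a service that is down is retried on the next request. Suppressing those retries while the service is known to be down requires the ability to disable services.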

Threads should NOT die and if they do the threadpool needs to generate a new one.

Failure behavior needs to cover maintenance periods of external services too, especially the single point of failure we have through MADIS (news, quotes and charts all rely on MADIS).

Note: The image cache cannot get too large! Should the images be cached in the file system instead?

The processing of a multi-page request has the following RAS properties:

Additional threads are used. The handler uses the server thread to start them. At least one thread must be available (possibly after waiting for it). A homepage request cannot work with a threadpool that has no threads, and it cannot use the server thread instead (which would be equivalent to putting all necessary handlers into the synchronous group). There are no means to check for available threads in the threadpool.

The size of the threadpool is still an open question. Testing has shown that an average homepage request uses between two and four threads, but this was before the introduction of the separate handler groups. In addition, the asynchronous requester capability of the cache (see below) will need threads too.

It is still an open question whether the threadpool should have an upper limit. One of the problems associated with having no upper limit is that the threadpool currently does not shrink; it only grows until the maximum value is reached. The question of upper limits is much more difficult to answer when timeouts are used (see below).

During the request the AEPortal database is contacted many times (e.g. for profile information) and parallel to this external services are accessed. There is no common transaction context between the handlers.

Processing is both sequential and parallel. The best case would be if the runtime of the synchronous group were equal to the runtime of the asynchronous handler group (which is started first). Testing has shown that in many cases the external services are slower (by a factor of 2 to 5)

A single slow service leads to a considerable system load since the other handlers have already allocated a lot of memory.

If one of the handlers involved blocks, the whole request coming from the web container is blocked. If the maximum number of open connections is reached, no new request can enter AEPortal WHILE the handler(s) are busy. This is also the case if one of the handlers in the asynchronous group blocks, because the homepage handler itself waits for the whole group to finish (if no timeout is set)

No timeouts are specified for a handler. If timeouts happen they do so within the external service access API.

There is currently no external service access API that would offer a Quality-of-Service interface e.g. to set timeouts or inquire the status of a service.
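Several of the open questions above correspond directly to the constructor parameters of `java.util.concurrent.ThreadPoolExecutor`. These include whether the pool needs an upper limit, the fact that it never shrinks, and the earlier question whether a requester queues or blocks when all threads are busy. The sketch below uses that class, which requires Java 5 or later and was therefore not available to AEPortal at the time. The sizes are placeholders, not tuned values.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

class PortalPools {
    // core: threads kept even when idle; max: hard upper limit; queueSize: bounded backlog.
    static ThreadPoolExecutor boundedPool(int core, int max, int queueSize) {
        return new ThreadPoolExecutor(
                core, max,
                30, TimeUnit.SECONDS,                    // idle threads above core are retired: the pool shrinks
                new ArrayBlockingQueue<>(queueSize),     // no unbounded queue hiding an overload
                new ThreadPoolExecutor.AbortPolicy());   // pool and queue full: reject, the caller degrades
    }

    public static void main(String[] args) {
        ThreadPoolExecutor pool = boundedPool(4, 16, 32);
        pool.execute(() -> System.out.println("handler ran on " + Thread.currentThread().getName()));
        pool.shutdown();
    }
}
```

Two properties of `ThreadPoolExecutor` matter here. First, threads beyond `core` are only created once the queue is full, so a large queue delays growth. Second, if a worker thread terminates because a task threw an exception, the pool starts a replacement when needed. `CallerRunsPolicy` would be the alternative to rejecting. However, it runs the handler on the servlet thread, which the homepage cannot do.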

The current policy for error messages is as follows: A failure in a service API should not make the JSP crash because of null pointers. The JSP on the other hand cannot create a specific and useful error message because it is not informed about service API problems. This is certainly something that needs to be fixed in a re-design.

Again, just as in the case of a simple page request, let’s assume that an external service becomes unavailable. Eventually a handler waiting for this resource will get a timeout in the access API of that service and return with an error. This may take x seconds to become effective. (We need to know more about timeouts in our access APIs.)

While waiting for the external resource, ALL HOMEPAGE HANDLERS that belong to one request will hold on to some system resources, and so will ALL HOMEPAGE REQUESTS that include this handler. On top of that, three of the homepage services go to ONE external service (MADIS), and all external services are subject to change (hopefully with early enough warning to AEPortal). This means that a blocked homepage request has a much bigger impact on system resources than a simple page request. In fact, without the ability to close down a specific service, any interface change in an external service could easily bring AEPortal down.

And since the homepage is at the same time the most important page and the first service after login, a blocking homepage service is a critical condition that can quickly drain the system of its memory resources.

The good news: The request blocks an entry into the system (servlet engine) and prevents overrun.

The effects on the user are also different compared to a simple page request: if a simple request hangs, the user can always go back to the homepage and choose a different service. This is not possible if the homepage hangs. We do not offer horizontal navigation yet (going from one service directly to any other service). That means that with a blocking homepage a user gets NOTHING. From an acceptance point of view, a quick and reliable homepage is also a must.

The dire effects of a failure in a homepage service have led to the introduction of a timeout for waits on the asynchronous service group. The requirements and consequences of a timeout will be discussed next.

After what has been said above, it should be clear that the external services make AEPortal very vulnerable, especially its most important page. Before we dive into the implementation of timeouts, a peculiarity of the Infrastructure architecture needs to be explained: the relation between handler, model and JSP.

Note: the "models" should really be result objects. The JSP should be a fairly simple render mechanism that can ALWAYS rely on result objects to be present – even in case of service API errors. Right now our JSPs are overloaded with error checking code.
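A result object of this kind could look like the following sketch. `Result`, its `Status` values and `QuotesView` are hypothetical. Every outcome, including a service failure or a timeout, is represented by an object, so the view switches on a status instead of checking for nulls.

```java
import java.util.Optional;

// Hypothetical result object that a handler (or the framework on its behalf) always provides.
final class Result<T> {
    enum Status { OK, SERVICE_ERROR, TIMED_OUT, NOT_CONFIGURED }

    private final Status status;
    private final T value;
    private final String message;

    private Result(Status status, T value, String message) {
        this.status = status;
        this.value = value;
        this.message = message;
    }

    static <T> Result<T> ok(T value) {
        return new Result<>(Status.OK, value, null);
    }

    static <T> Result<T> failed(Status status, String message) {
        return new Result<>(status, null, message);
    }

    Status status() { return status; }
    Optional<T> value() { return Optional.ofNullable(value); }
    String message() { return message; }
}

class QuotesView {
    // Stand-in for the JSP: one switch on the status, no scattered null checks.
    static String render(Result<String> quotes) {
        switch (quotes.status()) {
            case OK:
                return "<p>" + quotes.value().orElse("") + "</p>";
            case TIMED_OUT:
                return "<p>Quotes are currently slow, please try again later.</p>";
            case SERVICE_ERROR:
                return "<p>Quotes unavailable: " + quotes.message() + "</p>";
            default:
                return "";                       // NOT_CONFIGURED: the user did not choose this service
        }
    }
}
```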

Handlers create model objects. If a handler experiences a problem, it can store an exception inside a model object and make it accessible to the JSP. But what happens during a multi-page request? The first thing to notice is that the Homepage JSP cannot expect to find a model object for every possible homepage handler. The user may not have the rights for a certain service, or may have de-configured it. In these cases the handlers do not run and therefore do not create model objects.

The introduction of a timeout while waiting for asynchronous handlers creates another case of "no model": a handler blocks on an external service, but the homepage handler times out and returns to the controller and finally to the JSP. The blocked handler has not yet created or stored a model object. The only way around this problem is for the homepage handler to gather statistics about the handlers from the handler group and store them in the Homepage model. Now the JSP can learn which handlers returned successfully and which ones did not.
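The statistics step could be a map from handler name to outcome, built from the futures of the asynchronous group after the wait has returned. In this sketch, the names are hypothetical and `java.util.concurrent` futures stand in for AEPortal's handler group. A handler still running at the deadline is reported as timed out instead of silently leaving a missing model.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.concurrent.CancellationException;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.Future;

class HandlerReport {
    enum Outcome { COMPLETED, FAILED, TIMED_OUT }

    // Inspects each handler's future after the group wait has returned; never blocks.
    static Map<String, Outcome> collect(Map<String, Future<?>> running) {
        Map<String, Outcome> report = new LinkedHashMap<>();
        for (Map.Entry<String, Future<?>> e : running.entrySet()) {
            Future<?> f = e.getValue();
            Outcome outcome;
            if (!f.isDone()) {
                outcome = Outcome.TIMED_OUT;     // still running on its pool thread
            } else {
                try {
                    f.get();                     // already done, so this returns immediately
                    outcome = Outcome.COMPLETED;
                } catch (ExecutionException | CancellationException ex) {
                    outcome = Outcome.FAILED;
                } catch (InterruptedException ex) {
                    Thread.currentThread().interrupt();
                    outcome = Outcome.FAILED;
                }
            }
            report.put(e.getKey(), outcome);
        }
        return report;                           // stored in the Homepage model for the JSP
    }
}
```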

The implementation of a timeout is simple. The homepage handler calls waitForAll(timeout) on the handler group. It returns either because all handlers of the group signaled completion to the group or because of the timeout. The consequences are much more difficult. What happens to the handler that did not return on time? The answer is simple: nothing. It is very important to understand that the thread running the handler is not killed. Killing a thread in Java (Thread.stop()) is deprecated, and for good reason. When a thread is killed, all locks held by this thread are immediately released. If the thread was in the middle of a critical operation on some object's state, the object might be left in an inconsistent state.

This has an interesting effect on system resources. The homepage handler will return, and after rendering, the request will return to the servlet engine and thereby free an input connection into the engine, WHILE THERE IS STILL A THREAD RUNNING ON BEHALF OF THIS REQUEST WITHIN AEPortal. A new request can enter the system immediately. It will probably hit the same problem in the handler that timed out in the previous request and return, again leaving a thread running within AEPortal. Of course, these threads will eventually time out on the service API and return to the pool, but on a busy system we could easily run out of threads for new homepage requests (or even for asynchronous requests on the cache).
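The effect is easy to reproduce. This sketch uses hypothetical names, a two-thread `java.util.concurrent` pool standing in for the AEPortal threadpool, and a latch standing in for a service that never answers. Every simulated homepage request times out and returns, yet each one leaves a pool thread behind. After two requests, both threads are occupied, and the third handler never even starts.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Future;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;

class ThreadLeakDemo {
    // One simulated homepage request: start the handler, wait with a timeout, return to the servlet engine.
    static boolean homepageRequest(ExecutorService pool, CountDownLatch deadService, long timeoutMillis) {
        Future<?> handler = pool.submit(() -> {
            try {
                deadService.await();             // stands in for a service call that never answers
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        try {
            handler.get(timeoutMillis, TimeUnit.MILLISECONDS);
            return true;
        } catch (TimeoutException | ExecutionException e) {
            return false;                        // the request is answered, the handler keeps its thread
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
    }

    public static void main(String[] args) {
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                2, 2, 0L, TimeUnit.MILLISECONDS, new LinkedBlockingQueue<>());
        CountDownLatch deadService = new CountDownLatch(1);
        for (int request = 0; request < 3; request++) {
            homepageRequest(pool, deadService, 50);
        }
        // Both pool threads are still held by handlers whose requests have already been answered.
        System.out.println("busy threads: " + pool.getActiveCount()
                + ", queued handlers: " + pool.getQueue().size());
        deadService.countDown();                 // the service "recovers" and the threads are released
        pool.shutdown();
    }
}
```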

Does it help to leave the upper bound of the threadpool open? Not really since requests could come in so fast that we would exhaust any reasonable number of threads. And remember – we can’t shrink this number afterwards.

Note: we have discussed an alternative: wouldn't it be better to crash the VM through an exploding threadpool? In that case the WebSphere system management would restart the application server!

If we could prevent the new request from running the problematic handler we could avoid losing another thread. But this would require the functionality to disable and enable services.

Without being able to disable and enable services automatically (basically a problem detection algorithm) a timeout does not really make sense. It is even dangerous if set too low.
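A problem-detection switch of this kind is commonly called a circuit breaker. The sketch below uses a hypothetical `ServiceSwitch` with placeholder thresholds. It disables a service after a number of consecutive failures or timeouts, lets requests fail fast while the service is disabled, and allows a trial call after a cool-down period. The clock is injected so that the behavior can be tested.

```java
import java.util.concurrent.Callable;
import java.util.function.LongSupplier;

class ServiceSwitch {
    private final int failureThreshold;
    private final long coolDownMillis;
    private final LongSupplier clock;        // injected so the behavior can be tested
    private int consecutiveFailures = 0;
    private long disabledUntil = 0;

    ServiceSwitch(int failureThreshold, long coolDownMillis, LongSupplier clock) {
        this.failureThreshold = failureThreshold;
        this.coolDownMillis = coolDownMillis;
        this.clock = clock;
    }

    synchronized boolean isEnabled() {
        return clock.getAsLong() >= disabledUntil;
    }

    // Calls the service only while it is enabled; a disabled service costs no thread and no waiting.
    <T> T call(Callable<T> serviceCall, T fallback) {
        if (!isEnabled()) {
            return fallback;
        }
        try {
            T result = serviceCall.call();
            recordSuccess();
            return result;
        } catch (Exception e) {              // includes timeouts thrown by the access API
            recordFailure();
            return fallback;
        }
    }

    private synchronized void recordSuccess() {
        consecutiveFailures = 0;
    }

    // The counter is not reset on disabling: after the cool-down a single failed trial call
    // disables the service again, while a single success resets the counter.
    private synchronized void recordFailure() {
        consecutiveFailures++;
        if (consecutiveFailures >= failureThreshold) {
            disabledUntil = clock.getAsLong() + coolDownMillis;
        }
    }

    public static void main(String[] args) {
        ServiceSwitch madis = new ServiceSwitch(3, 60_000, System::currentTimeMillis);
        System.out.println(madis.call(() -> "news", "News currently unavailable"));
    }
}
```

With such a switch in front of a service like MADIS, a request arriving while the service is disabled gets its fallback immediately, and no thread is left waiting.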

The handler threading mechanism was introduced at a time when many handlers used little or no caching at all. It could be expected that every handler in the asynchronous group would have to go out on the network and request data.

The membership in the asynchronous group therefore became a property of the handler – tagged onto the page description. While this is easily changed by a change to the configuration file it still presents a problem in case of advanced caching: If the data the handler has to collect is already in the cache, the handler will return almost immediately. This is a waste of computing resources because the overhead of thread scheduling is bigger than the time spent in the handler to return the CACHED data.

The homepage handler, on the other hand, cannot know in advance whether e.g. the news handler will find the requested data in the cache. It needs to start the news handler in its own thread (per configuration) even if the news handler runs for only 10 ms.
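Suppose handlers could answer a cheap question before being scheduled, namely whether their data is already in the cache. Then the homepage handler could resolve cache hits inline and spend a pool thread only on misses. The sketch assumes a hypothetical `CachingHandler` interface with a `cachedResult()` probe. Nothing like it exists in the current architecture, which is the design problem described next.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Optional;
import java.util.concurrent.Callable;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Future;

// Hypothetical: a handler that can report a cached result without doing any I/O.
interface CachingHandler {
    Optional<String> cachedResult();

    String load() throws Exception;          // slow path: goes out to the external service
}

class CacheAwareScheduler {
    // Cache hits are answered on the calling thread; only misses cost a pool thread.
    static List<Future<String>> schedule(List<CachingHandler> handlers, ExecutorService pool) {
        List<Future<String>> results = new ArrayList<>();
        for (CachingHandler h : handlers) {
            Optional<String> hit = h.cachedResult();
            if (hit.isPresent()) {
                results.add(CompletableFuture.completedFuture(hit.get()));   // no thread switch
            } else {
                Callable<String> slowPath = h::load;
                results.add(pool.submit(slowPath));
            }
        }
        return results;
    }
}
```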

Why doesn’t the homepage Handler know which data the news handler will retrieve? Because there is no description of those data available! The only instances that know about certain model objects are handlers and their views. This makes caching and scheduling much harder.

What looks like a little nuisance hides a much bigger design flaw in the portal architecture: The architecture is procedure/request/transaction driven and not data/publishing driven:

Without running a handler no results ("models") come to exist

Only the code within a handler knows what data ("models") will be created, where they will be stored and what they will be called

A page does not have a definition of its content – quite the opposite is true: a handler defines implicitly what a page really is. Example: the content of the homepage is defined as the set of handlers that need to run to create certain data ("models")

Handlers need to deal with caching issues directly and internally. No intermediate layer could deal with cached data because nobody besides each specific handler knows which data should/could be cached

The framework maps GET and POST requests into one request type, ignoring the different semantics of the two request types in HTTP. There seem to be no rules within our team regarding the use of GET or POST.

We have already seen some of the consequences of this approach with respect to caching and scheduling. But think about using the AEPortal engine behind a new access channel or just AEPortal light. Whoever wants to extract information through AEPortal needs to know about handlers and their internals (models). Instead of knowing about data and data fragments, clients need to call handlers. Again, this architecture is good for transactional purposes but it is disastrous for publishing purposes.

A few hints on a data-driven alternative:

The system retrieves the homepage description containing links to fragments.

The system tries to retrieve the fragments from the caching layer, with the user context as a parameter

If the cache contains the item in the version needed (we have personalized data!) it is returned immediately.

If we have a cache miss, the proper requester turns around and gets a handler to retrieve the data (synchronously or asynchronously)

The items returned are assembled and forwarded to the proper view.

This means for clients that they do not know about system internals. All they need to know is the INFORMATION they want – not which handlers they have to call to retrieve an information set that hopefully contains all the data they need.
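The steps above can be sketched in Java. This is a minimal, single-threaded sketch under stated assumptions, not AEPortal code: `PageDescription`, `FragmentCache` and `PageAssembler` are hypothetical names, the user context is reduced to one personalization key per fragment (`"*"` for global fragments), and the handler that runs on a cache miss is simply a function.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.function.BiFunction;

// Hypothetical page description: the ordered names of the fragments on a page.
class PageDescription {
    final String name;
    final List<String> fragmentIds;

    PageDescription(String name, List<String> fragmentIds) {
        this.name = name;
        this.fragmentIds = fragmentIds;
    }
}

// Hypothetical caching layer, keyed by fragment id plus a personalization key.
// Global fragments use the key "*", so all users share a single entry.
class FragmentCache {
    private final Map<String, String> entries = new HashMap<>();
    // Stands in for "the proper requester" that gets a handler on a cache miss.
    private final BiFunction<String, String, String> requester;
    int misses = 0;

    FragmentCache(BiFunction<String, String, String> requester) {
        this.requester = requester;
    }

    String get(String fragmentId, String personalizationKey) {
        String key = fragmentId + "|" + personalizationKey;
        String cached = entries.get(key);
        if (cached != null) {
            return cached;                     // hit: no handler runs at all
        }
        misses++;
        String fresh = requester.apply(fragmentId, personalizationKey); // synchronous here
        entries.put(key, fresh);
        return fresh;
    }
}

class PageAssembler {
    // The client names the page (the INFORMATION it wants), not the handlers.
    static List<String> assemble(PageDescription page, FragmentCache cache,
                                 Set<String> personalizedIds, String userId) {
        List<String> parts = new ArrayList<>();
        for (String id : page.fragmentIds) {
            String personalizationKey = personalizedIds.contains(id) ? userId : "*";
            parts.add(cache.get(id, personalizationKey));
        }
        return parts;                          // handed on to the proper view
    }
}
```

Two users requesting the same page share the cached global fragments; only their personalized fragments cause additional handler runs.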

We will discuss this some more in the chapter on caching, when we meet the problem of document fragments.

For more information about this have a look at:

http://www.apache.org/turbine/pullmodel.html

Its focus is on GUI flexibility, since UI designers cannot change the GUI without also changing the Java-based handlers that create the result objects, but the reasoning also applies to caching and fragments.

The current handler design did not force developers to separate different use cases into different handlers. A typical example is the authentication handler, which provides the rendering on behalf of the authentication front-end: different requests all end up in one handler, distinguished only by different parameters. This causes the following problems:

The fragment based architecture described below needs to separate the different requests into clearly distinguishable fragments.

The handlers need a more generic design that allows configuring e.g. a fragment handler by specifying the service needed. This is done, for example, in the Portlet approach (Apache Jetspeed).

The issue of multiple multi-pages or homepages is caused by the simple fact that our current homepage cannot grow endlessly: we could not deliver all those fragments in a reasonable time, not to mention that the whole page might become very confusing.

Technically the problem is not very hard to solve:

the homepage handler needs to be generalized into a multi-page handler. It looks at the requested page name from RequestContext and retrieves the proper page description

HandlerGroup and derived synchronous and asynchronous handler groups would need to be generalized a bit.

The page descriptions in the ControllerConfig.xml file need to be extended to allow page links within page elements (in case a service appears in several multi-pages)

A navigation scheme between the multi-pages would be necessary, but it would be the same as the horizontal navigation that is needed anyway.

Some rules need to control the number and selection of services per multi-page
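A minimal sketch of the generalized multi-page handler, assuming the page descriptions from ControllerConfig.xml have already been parsed into a map from page name to service names. `MultiPageHandler`, the fallback to a page called `home` and the `maxServicesPerPage` limit are hypothetical; the limit stands in for the rules mentioned in the last item.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

class MultiPageHandler {
    private final Map<String, List<String>> pageDescriptions = new HashMap<>();
    private final int maxServicesPerPage;

    MultiPageHandler(int maxServicesPerPage) {
        this.maxServicesPerPage = maxServicesPerPage;
    }

    // Register a page description; reject pages that break the per-page service rule.
    void definePage(String pageName, List<String> services) {
        if (services.size() > maxServicesPerPage) {
            throw new IllegalArgumentException(pageName + " has " + services.size()
                + " services, limit is " + maxServicesPerPage);
        }
        pageDescriptions.put(pageName, new ArrayList<>(services));
    }

    // The page name would come from the RequestContext; unknown names fall back to "home".
    List<String> servicesFor(String requestedPage) {
        List<String> services = pageDescriptions.get(requestedPage);
        if (services == null) {
            services = pageDescriptions.getOrDefault("home", Collections.emptyList());
        }
        return Collections.unmodifiableList(services);
    }
}
```

The same service name (here it would be e.g. a quotes service) may appear in several page descriptions, which is the case the extended page links are meant to cover.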

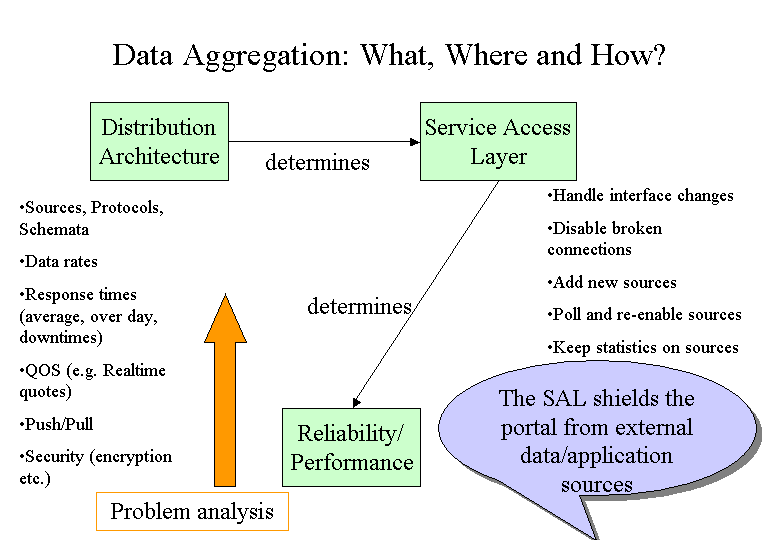

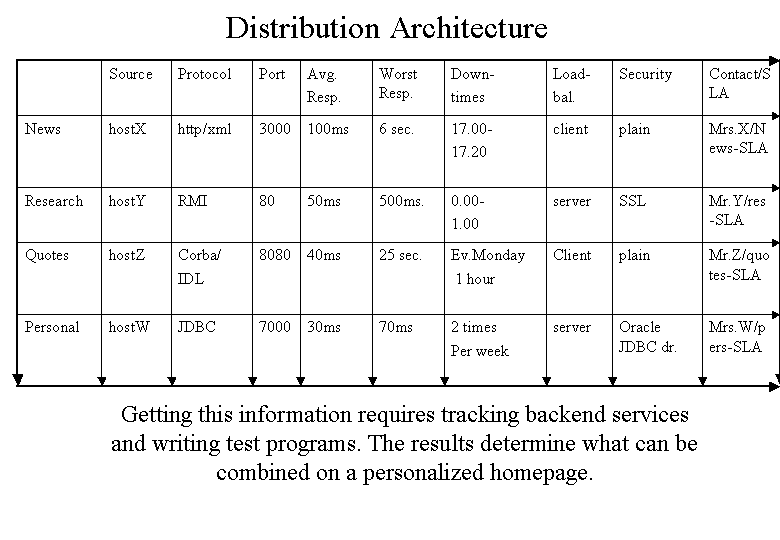

The way external services were accessed proved to be the most influential factor for system reliability. What was missing was a service API through which all access had to go and which would be a major point for monitoring those external services. The chapter on portal architecture will show what is needed.
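To make the idea more concrete, here is a minimal sketch of such a service API: every call to an external service goes through one gateway, which counts calls and failures and accumulates the time spent per service. `ServiceGateway` and its methods are hypothetical; a real version would also enforce timeouts and feed these figures into the monitoring infrastructure.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.LongAdder;
import java.util.function.Supplier;

class ServiceGateway {
    // Per-service counters, safe for concurrent handler threads.
    static final class Stats {
        final LongAdder calls = new LongAdder();
        final LongAdder failures = new LongAdder();
        final LongAdder totalNanos = new LongAdder();
    }

    private final Map<String, Stats> stats = new ConcurrentHashMap<>();

    // Single entry point for all access to external services.
    <T> T call(String serviceName, Supplier<T> request) {
        Stats s = stats.computeIfAbsent(serviceName, n -> new Stats());
        s.calls.increment();
        long start = System.nanoTime();
        try {
            return request.get();
        } catch (RuntimeException e) {
            s.failures.increment();
            throw e;                           // the caller still sees the failure
        } finally {
            s.totalNanos.add(System.nanoTime() - start);
        }
    }

    long calls(String serviceName) {
        Stats s = stats.get(serviceName);
        return s == null ? 0 : s.calls.sum();
    }

    long failures(String serviceName) {
        Stats s = stats.get(serviceName);
        return s == null ? 0 : s.failures.sum();
    }
}
```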


A large-scale web application needs an end-to-end view of how pages are created and served, on both the client side and the server side.

This covers issues like dynamic page creation, the structure of pages, compression and load balancing, and ranges from HTTP header settings through colors and page structure right down to the application architecture.

We will briefly discuss the current settings for client side caching in our infrastructure and then move on to server side caching.

The biggest problem in caching dynamic and personalized data is cache invalidation. Client side browser caches as well as intermediate proxy caches cannot be forced to invalidate an entry ON DEMAND. These caches either do not cache at all or cache for a certain time only. The newer HTTP/1.1 protocol also allows them to re-validate a cached item by asking the server, driven by the cache-control settings for the page.

The result is that cache-control settings for browser and proxy caches need to be conservative.

Server side caches, on the other hand, MUST HAVE AN INVALIDATION INTERFACE and possibly also validator objects that decide when and if a certain fragment needs to be invalidated.
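A minimal sketch of such an invalidation interface, assuming string keys and a pluggable validator that is consulted on every read. `InvalidatingCache` and `Validator` are hypothetical names; the point is only that, unlike browser and proxy caches, a server side cache can drop an entry on demand.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Function;

// Decides whether a cached entry is still usable (e.g. by age or by a version number).
interface Validator<V> {
    boolean isValid(String key, V value, long storedAtMillis);
}

class InvalidatingCache<V> {
    private static final class Stored<T> {
        final T value;
        final long storedAt;

        Stored(T value, long storedAt) {
            this.value = value;
            this.storedAt = storedAt;
        }
    }

    private final Map<String, Stored<V>> entries = new ConcurrentHashMap<>();
    private final Validator<V> validator;

    InvalidatingCache(Validator<V> validator) {
        this.validator = validator;
    }

    V get(String key, Function<String, V> loader) {
        Stored<V> s = entries.get(key);
        if (s != null && validator.isValid(key, s.value, s.storedAt)) {
            return s.value;
        }
        V fresh = loader.apply(key);           // miss, or rejected by the validator
        entries.put(key, new Stored<>(fresh, System.currentTimeMillis()));
        return fresh;
    }

    // The invalidation interface: explicit and on demand.
    void invalidate(String key) {
        entries.remove(key);
    }

    void invalidateAll() {
        entries.clear();
    }
}
```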

Since AEPortal is a personalized service, our initial approach to client side caching was to simply turn it off completely.

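```java
// Sent with every page to switch off browser and proxy caching completely.
private static String sExpiresValue = "0"; // invalid date: HTTP/1.1 caches must treat it as already expired
private static String sCacheControlValue = "no-cache, no-store, max-age=0, s-maxage=0, must-revalidate, proxy-revalidate"; // HTTP 1.1: do not cache nor store on proxy server. AKP
private static String sPragmaValue = "no-cache"; // HTTP 1.0 counterpart of Cache-Control: no-cache
```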

These values are currently set at the beginning of the service method of our controller servlet. They are the same for all pages. It would not be hard to make them page specific, driven by a tag in our ControllerConfig.xml. That is what, for example, the Struts package from Apache.org plans to do in its next release.
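A minimal sketch of page-specific header values, assuming the per-page setting is a max-age in seconds (a hypothetical attribute, not an existing ControllerConfig.xml tag). A value of zero reproduces today's settings. For positive values the sketch uses `private`, so that shared proxies do not store personalized pages; that is a design choice of this sketch. In the servlet, each entry would be passed to `HttpServletResponse.setHeader(name, value)`.

```java
import java.time.Instant;
import java.time.ZoneOffset;
import java.time.format.DateTimeFormatter;
import java.util.LinkedHashMap;
import java.util.Locale;
import java.util.Map;

class PageCacheHeaders {
    // HTTP-date in RFC 1123 form: always GMT, two-digit day of month.
    private static final DateTimeFormatter HTTP_DATE =
        DateTimeFormatter.ofPattern("EEE, dd MMM yyyy HH:mm:ss 'GMT'", Locale.US)
                         .withZone(ZoneOffset.UTC);

    // Computes the caching headers for one page from its (hypothetical) max-age setting.
    static Map<String, String> forPage(int maxAgeSeconds, Instant now) {
        Map<String, String> headers = new LinkedHashMap<>();
        if (maxAgeSeconds <= 0) {
            // The values the controller servlet currently sends for every page.
            headers.put("Expires", "0");
            headers.put("Cache-Control",
                "no-cache, no-store, max-age=0, s-maxage=0, must-revalidate, proxy-revalidate");
            headers.put("Pragma", "no-cache");
        } else {
            // Browsers may keep the page for maxAgeSeconds; shared proxies may not store it.
            headers.put("Cache-Control", "private, max-age=" + maxAgeSeconds);
            headers.put("Expires", HTTP_DATE.format(now.plusSeconds(maxAgeSeconds)));
        }
        return headers;
    }
}
```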

We do not use a validator (e.g. LAST_MODIFIED), which means that clients will not ask us to validate a cached page. Instead they will always pull down a fresh page.

We also do not use the servlet method getLastModified() which has the following use case:

It's a standard method from HttpServlet that a servlet can implement to return when its content last changed. Servers traditionally use this information to support "Conditional GET" requests that maximize the usefulness of browser caches. When a client requests a page they've seen before and have in the browser cache, the server can check the servlet's last modified time and (if the page hasn't changed from the version in the browser cache) the server can return an SC_NOT_MODIFIED response instead of sending the page again. See Chapter 3 of "Java Servlet Programming" for a detailed description of this process.

Jason Hunter, http://www.servlets.com/soapbox/freecache.html

A simple example that a personalized homepage need not exclude the use of client side caching:

If the decision to use the cached homepage can be based purely on the age of the homepage (e.g. 30 seconds), getLastModified() would simply compare the creation time of the homepage (stored in the session?) with the current time.

This would help in all those re-size cases (Netscape). It would also decrease system load during navigation (we don’t have a horizontal navigation yet).

Please note: We are talking the full, personalized homepage here. Further down in "client side caching" we will also take the homepage apart – following an idea of Markus-A.Meier.

First, the controller servlet was changed to set both the EXPIRES header and the MAX_AGE cache-control value to a default of 20 seconds per page. To enable the getLastModified() mechanism, a validator (in our case LAST_MODIFIED) was set to the current time when a page was created, and getLastModified() returned the current time minus 20000 ms by default. No explicit invalidation of pages was done.
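The decision behind this setup can be simulated without a servlet container. The sketch assumes the comparison performed by common implementations of `HttpServlet.service()` for GET requests (a `lastModified` of -1 means unknown, and the value is rounded down to whole seconds before being compared with `If-Modified-Since`, which is -1 when the header is absent). `ConditionalGet` is a hypothetical helper, and its `lastModified()` implements the now-minus-20-seconds rule described above.

```java
class ConditionalGet {
    static final int SC_OK = 200;
    static final int SC_NOT_MODIFIED = 304;

    // The rule described above: a page counts as modified once it is older than maxAgeMillis.
    static long lastModified(long nowMillis, long maxAgeMillis) {
        return nowMillis - maxAgeMillis;                   // e.g. current time - 20000
    }

    // The GET decision: -1 means "unknown"; HTTP dates carry no milliseconds,
    // so lastModified is rounded down to a whole second before comparing.
    static int decide(long lastModified, long ifModifiedSince) {
        if (lastModified == -1) {
            return SC_OK;
        }
        if (ifModifiedSince < (lastModified / 1000 * 1000)) {
            return SC_OK;                                  // build the page again
        }
        return SC_NOT_MODIFIED;                            // the browser copy is still good
    }
}
```

A browser that received the page at time t0 sends t0 in If-Modified-Since; it gets a 304 while the page is younger than 20 seconds and a freshly built page afterwards. The rule only looks at age, so a profile change within those 20 seconds goes unnoticed.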

Note: the controller servlet was modified in several ways:

It now implements the service method, overriding the one inherited from HttpServlet. I noticed that this seems to be the method that calls getLastModified(), which allows us to distinguish the case where getLastModified() is called to set the modification time from the case where it is called to test for expiration (see J. Hunter).

It is unclear if overriding the service() method is actually a no-no.

The servlet now also implements the destroy() method, even though it only logs an error message: right now we are not able to restart the application (servlet) without restarting the application server, because we use static singletons. The destroy method should at least close the thread pool and the reference data manager.

It is unclear under which circumstances the WebSphere container would actually call destroy(). Could our servlet and container experts please comment on this?

We did not set the must-revalidate cache-control directive yet, but some pages would probably benefit from it.

Problems:

The expiration time may depend on more than the page. Different users could have different QOS agreements for the same pages; real-time quotes are a typical example. Either we use different pages for those customers (which could force us to use many different pages) or we specify an array of expiration times per page

After the homepage layout was changed (myprofile), a stale homepage containing the old services was served once to the user. We need to use must-revalidate and a better handling of the getLastModified() method.

We don’t know if business will authorize an expiration time of 20 seconds for every service.

We don’t know how clients will use our site (our user interface and usability specialist Andy Binggeli has long been requesting user acceptance tests), and therefore we must guess usage patterns, e.g. navigational patterns

Results:

Three out of four homepage requests for one user came from the local browser cache

Navigation between the homepage and single services was much quicker

Browsers treat the "re-load" button differently: Opera, for example, requests an uncached page when the re-load button is hit, while Netscape needs "shift + re-load" for this.

JavaScript files do not seem to be cached, at least within our test environment. This would mean a major performance hit, as some of them are around 60 KB in size.

While client side caching will not affect our load tests (e.g. login, homepage, single-service, logout), regular work with AEPortal would benefit a lot.

A possible extension of the page element that covers the content lifecycle could look like this:

Example 5.1. Lifecycle definitions for cachable information objects

```xml
<!-- page: may refer to a named lifecycle instance by IDREF (optional) -->
<!ELEMENT page (..., lifecycle?, ...)>
<!ATTLIST page
  lifeCycleRef IDREF #IMPLIED>

<!ELEMENT lifecycle EMPTY>
<!--
  name:               allows others to refer to this lifecycle definition
  expires:            after x milliseconds the user agent should invalidate the page.
                      The system will assume a reasonable default if none is given.
                      A zero tells the user agent NOT to cache at all.
  askAfterExpiration: the user agent should ask the server after the expiration time
  askAlways:          the user agent should ALWAYS ask for validation
  validator:          the system will ask the given validator for validation during a
                      getLastModified() request OR when a validator (LAST_MODIFIED or
                      ETAG) needs to be created. Allows pages to specialize this.
  useCachedOnError:   experimental: what to do in case the backend is down
-->
<!ATTLIST lifecycle
  name               ID           #IMPLIED
  expires            CDATA        #IMPLIED
  askAfterExpiration (yes|no)     "yes"
  askAlways          (true|false) "false"
  validator          CDATA        #IMPLIED
  useCachedOnError   (y|n)        "n">
```

Note

The lifecycle element is an architectural element. It is intended to be used in different contexts e.g. pages, page fragments etc. Therefore a lifecycle instance can have a name that serves as an ID. Users of this instance can simply refer to it and "inherit" its values.

This does not prevent users from specifying their own lifecycle instance and STILL referring to another one. In this case the user’s own instance overrides the one that was referred to.
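The override rule can be sketched in Java. Assumptions: `Lifecycle` and `LifecycleRegistry` are hypothetical classes covering a subset of the attributes of Example 5.1 (a `null` field means the attribute was not given), and the 20-second default for `expires` is borrowed from the controller servlet settings described earlier; the DTD itself leaves the default open.

```java
import java.util.HashMap;
import java.util.Map;

// Mirrors part of the lifecycle element; null means "not specified".
class Lifecycle {
    String name;
    Long expires;                 // milliseconds; 0 = do not cache
    Boolean askAfterExpiration;
    Boolean askAlways;
    String validator;

    // Values given in 'this' override those of 'base'.
    Lifecycle overriding(Lifecycle base) {
        Lifecycle r = new Lifecycle();
        r.name = name;
        r.expires = expires != null ? expires : base.expires;
        r.askAfterExpiration = askAfterExpiration != null ? askAfterExpiration : base.askAfterExpiration;
        r.askAlways = askAlways != null ? askAlways : base.askAlways;
        r.validator = validator != null ? validator : base.validator;
        return r;
    }
}

class LifecycleRegistry {
    private final Map<String, Lifecycle> named = new HashMap<>();

    void register(Lifecycle lifecycle) {
        named.put(lifecycle.name, lifecycle);
    }

    static Lifecycle defaults() {
        Lifecycle d = new Lifecycle();
        d.expires = 20_000L;          // assumption: the 20 s default of the controller servlet
        d.askAfterExpiration = true;  // DTD default "yes"
        d.askAlways = false;          // DTD default "false"
        return d;
    }

    // Effective lifecycle: defaults, overridden by the referred instance, overridden by the own one.
    Lifecycle effective(String lifeCycleRef, Lifecycle own) {
        Lifecycle result = defaults();
        if (lifeCycleRef != null) {
            Lifecycle referred = named.get(lifeCycleRef);
            if (referred == null) {
                throw new IllegalArgumentException("unknown lifecycle: " + lifeCycleRef);
            }
            result = referred.overriding(result);
        }
        if (own != null) {
            result = own.overriding(result);
        }
        return result;
    }
}
```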

AEPortal includes a number of static images that should be served from the reverse proxies. The same is true of our JavaScript files.

Note: who in production will take care of that?

Further caching of information is a tricky topic because the information might be personalized. We do not allow proxy side caching right now, but for some information it might be OK to use the public cache-control directive.

This chapter obviously needs a more careful treatment.

"Encryption consumes significant CPU cycles and should only be used for confidential information; many Web sites use encryption for nonessential information such as all the image files included in a Web page" (23).

Is there a way to exclude images from encryption within an SSL session?

Can we cache encrypted objects (e.g. chart images, navigation buttons, small GIFs, navigation bars, logos etc.)? At least an expiration time and/or a validator would be necessary. What about JavaScript?

BTW: I don’t think that the image handler (which writes the images directly to the servlet output stream) sets any cache-control values that would allow client and/or proxy side caching.

BTW: how does socket-keep-alive work?

Which services (fragments) really need to be encrypted?

AEPortal currently uses a caching infrastructure that allows various QOS, e.g. asynchronous requests. This infrastructure should be used for domain object caching needs. It can be found in the package comaepinfrastructure.caching. For caching in other layers the following chapters suggest some other techniques too.

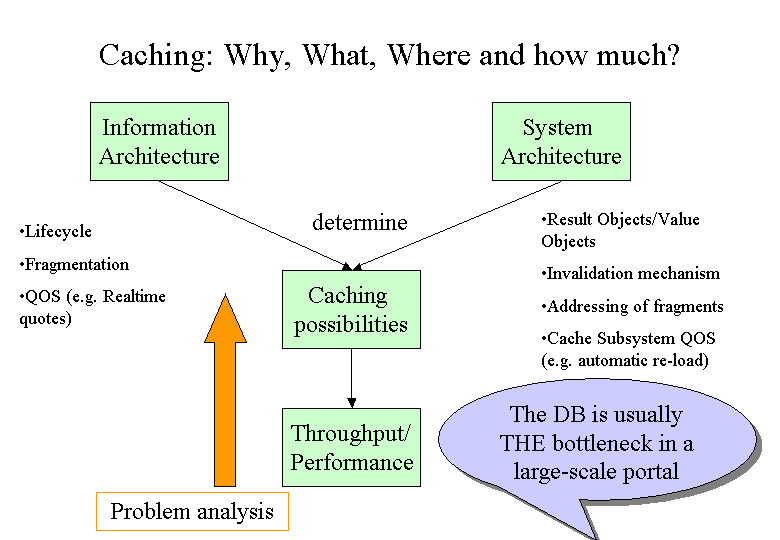

The reason for caching is quite simple: throughput (and in some cases availability). In some cases, especially when dealing with personalized information, caching will speed up a single new request, but the effect may not be considered worth the effort, e.g. because the single-user case is already fast enough. On a large-scale site, however, caching will allow us to serve a much larger number of requests concurrently.

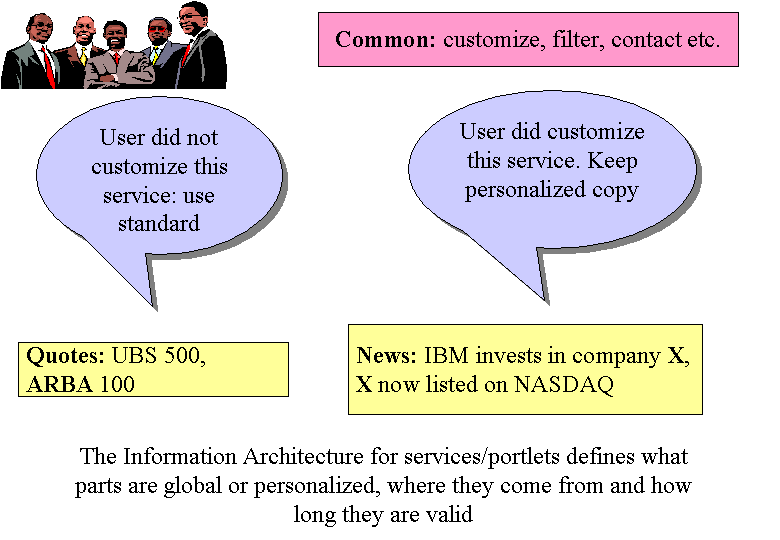

This is why in many projects caching gets introduced at a late stage (once the throughput problems are obvious). And it takes some arguing to convince everybody of its importance, because it does not speed up a single personalized request as long as there is no clear distinction between global pieces, individual selections of global pieces and truly individual pieces like a greeting.

Note: the possibilities for caching are restricted by the application architecture. Caching requires a decomposition of the information space along the dimensions of time and personalization.

The results from our load tests are pretty clear: homepage requests are expensive. They allocate a lot of resources and suffer from expensive and unreliable access to external services. And last but not least, we would like to avoid DoS attacks caused by simply pressing the re-load button of the browser.

Sometimes caching can also improve availability: if a backend service is temporarily unavailable, the system can still use cached data. This depends, of course, on the quality of the data and excludes things like quotes. The OpenSymphony OSCache module provides a tag library that includes such a feature:

```jsp
<cache:cache>
  <% try { %>
    Inside try block.
    <%-- do regular processing here --%>
  <% } catch (Exception e) { %>
    <%-- in case of a problem, use the cached version --%>
    <cache:usecached />
  <% } %>
</cache:cache>
```

(see Resources, Opensymphony)
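The same use-cached-on-error idea outside a JSP, as a minimal Java sketch. `FallbackCache` is a hypothetical name and not part of OSCache. As noted above, it should only be used for data whose staleness is acceptable, so not for quotes.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Supplier;

class FallbackCache<V> {
    private final Map<String, V> lastGood = new ConcurrentHashMap<>();

    // Try the backend first; if it fails, serve the last good value, if there is one.
    V get(String key, Supplier<V> backend) {
        try {
            V fresh = backend.get();
            if (fresh != null) {
                lastGood.put(key, fresh);      // remember the last successful result
            }
            return fresh;
        } catch (RuntimeException e) {
            V cached = lastGood.get(key);
            if (cached == null) {
                throw e;                       // nothing to fall back on
            }
            // A real system would report the error here.
            return cached;
        }
    }
}
```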

Our original thinking here was that most of our content is NOT cacheable because it is dynamic. A closer inspection of our content revealed that a lot of it would actually be cacheable but this chance has either been neglected or even prohibited by architectural problems.

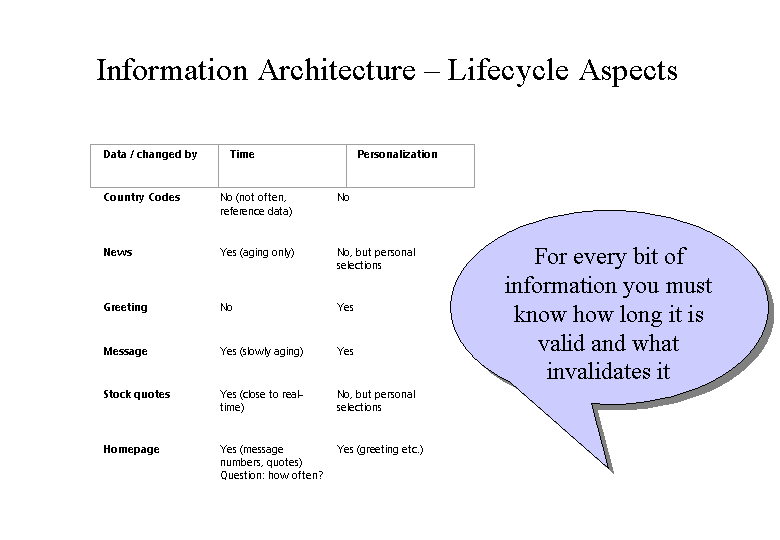

Let’s look at some types of information and their behavior in case of caching: The difficulties for caching algorithms increase from upper left to lower right.

| Data / changed by | Time | Personalization |
|---|---|---|
| Country codes | No (not often, reference data) | No |
| News | Yes (aging only) | No, but personal selections |
| Greeting | No | Yes |
| Message | Yes (slowly aging) | Yes |
| Stock quotes | Yes (close to real-time) | No, but personal selections |
| Homepage | Yes (message numbers, quotes); question: how often? | Yes (greeting etc.) |

Country codes are reference data. They rarely change. In AEPortal there is a separate caching mechanism (described below) that deals with reference data only.

All other kinds of data are changed either by time or through personalization and require different handling. The next best thing to reference data are data that change over time but are at least GLOBAL. Examples are news and quotes, which do not differ by person (this does not mean that everybody will get the same news, since users make personal selections from them).

A greeting (welcome message) does not change at all during a session but is highly personalized. This reduces the benefit of caching but does not make it unnecessary for a large site: reading the same message from the DB on every re-load does not cost a lot, but with hundreds of users it is unnecessary overhead.

The homepage is a pretty difficult case. Our initial approach was not to use caching at all, because the page was considered highly personalized and also contained near real-time data (quotes).

This was a mistake for the following reasons:

Page reloads forced by navigation or browser re-size would cause a complete rebuild of the homepage

According to a report from the Yahoo team (Communications of the ACM, topic personalization), 80% of all users do NOT customize their homepage. This would mean that, apart from the personal greeting, everything else on the homepage would be standard

Even a very short delay for quotes data would save a lot of roundtrips to the backend service MADIS. Right now EVERY STANDARD quotes request (i.e. the user did not specify a personal quotes list) goes to the backend!

The homepage could be cached in parts too (see below: partial caching)

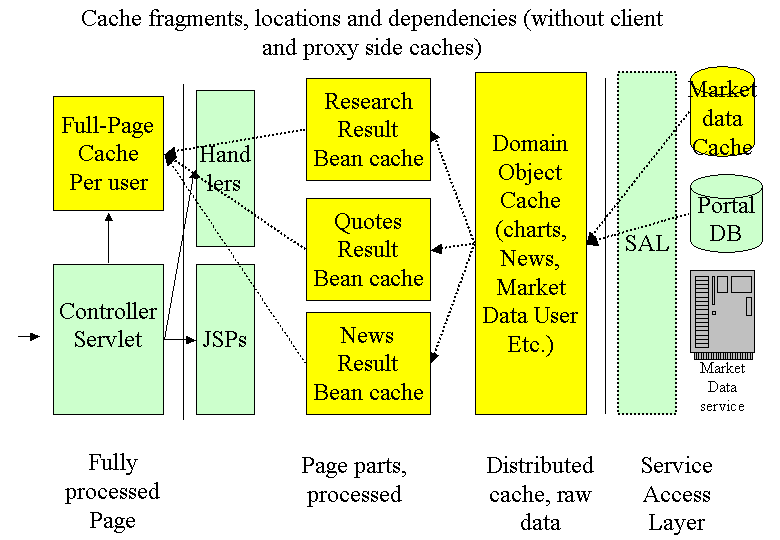

Let’s first draw a diagram of possible caching locations:

An example for full-page caching is taken again from servlets.com:

Server Caching is Better

The problem with this use of getLastModified() is that the cache lives on the client side, so the performance gain only occurs in the relatively rare case where a client hits Reload repeatedly. What we really want is a server-side cache so that a servlet's output can be saved and sent from cache to different clients as long as the servlet's getLastModified() method says the output hasn't changed.

The existing code for a full page server side cache from O'Reilly could easily be extended to support caching of personalized pages. Page description elements should get an additional qualifier to allow this kind of caching.
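A minimal sketch of such an extension, assuming the cache is keyed by page name plus user id when the page is personalized, and that a cached page is reused as long as its `getLastModified()` value has not changed (the idea quoted from servlets.com). `FullPageCache` and the `perUser` flag, which plays the role of the additional qualifier, are hypothetical; this is not the O'Reilly code.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Supplier;

class FullPageCache {
    private static final class CachedPage {
        final long lastModified;
        final String html;

        CachedPage(long lastModified, String html) {
            this.lastModified = lastModified;
            this.html = html;
        }
    }

    private final Map<String, CachedPage> pages = new ConcurrentHashMap<>();

    // perUser plays the role of the additional qualifier on the page description.
    String render(String pageName, boolean perUser, String userId,
                  long lastModified, Supplier<String> renderer) {
        String key = perUser ? pageName + "|" + userId : pageName;
        CachedPage cached = pages.get(key);
        if (cached != null && cached.lastModified == lastModified) {
            return cached.html;                // output unchanged: reuse it
        }
        String html = renderer.get();          // run the handlers and the view
        pages.put(key, new CachedPage(lastModified, html));
        return html;
    }

    int size() {
        return pages.size();
    }
}
```

Every personalized page is stored once per user, which is where the memory cost of this approach comes from.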

For a discussion of cache size see below (How Much?)

The full page caching approach suffers from a number of restrictions. While it solves the re-load problem (caused by quick navigation or browser re-sizing), it forces us to keep a separate homepage per user. Also, the cache time depends on the page part with the shortest aging time, so we can’t store the homepage for a long period of time: 30 seconds seems to be the limit. And if many users do not change their settings, or change only a few, we keep many duplicates in those homepages.

These problems could be solved by using a partial caching strategy IN ADDITION to, or as a replacement for, the full page cache.

Partial page caching: see http://www.opensymphony.com/oscache/

Dynamic content must often be executed in some form each request, but sometimes that content doesn't change every request. Caching the whole page does not help because parts of the page change every request. OSCache solves this problem by providing a means to cache sections of JSP pages.

Error Tolerance - If one error occurs somewhere on your dynamic page, chances are the whole page will be returned as an error, even if 95% of the page executed correctly. OSCache solves this problem by allowing you to serve the cached content in the event of an error, and then reporting the error appropriately.

Currently we have a problem providing partial caching: we don’t have the infrastructure to support it properly. Within AEPortal, a handler needs to run for every request. This handler allocates resources and creates the result beans (models). These models are not cacheable (they store references to the request etc.). The homepage handler could be tweaked to supply cached model objects without running the respective handlers, but this would be a kludge.

If we had this functionality, we could assemble homepages from standard parts (not changed by personalization) and personalized parts that cannot be cached at all or only for a short time. The standard, non-personalized parts would be updated asynchronously by the cache (using the aging descriptions).

Again, if the 80% rule (yahoo) is correct, this approach would increase throughput enormously. In a first step we would probably cache only the non-personalized parts.

The Domain Object Cache already exists in AEPortal. It is currently used for pictures (charts), profiles and External Data SystemUser: a mixture of personalized and global data. This cache should actually be a distributed one (see below: cloning)

Last but not least, the diagram shows a special cache DB for MADIS on the right side. Here we could cache and/or automatically replicate frequently used MADIS data (or data from other slow or unreliable external services).

Results from Olympic Games sites (Nagano etc.) indicate that navigation design has a major impact on site performance. The 1998 Nagano site had a fairly crowded homepage compared to previous sites. This was to avoid useless intermediate page requests and deep navigation paths (see Resources 6).

While the AEPortal homepage is already the place for most of the users’ interests, the page structure could be improved in various ways according to Andy Binggeli. Some of his ideas are:

Separate the personal parts from the default/standard parts. This is especially important for the welcome message: if the welcome message is the only personalized part of an otherwise unchanged homepage, we could easily take the unchanged part from a cache.

Separate the quick database services from slow external access services. Flush every page part as soon as possible

The proposed changes would NOT require us to use frames. But we would have to give up the single large table layout approach and possibly create some horizontally layered tables.

A full-page cache that holds every page for every user for a whole session could become very large and pose a performance and stability problem for the Java VM.

Some quick guesstimates:

A homepage has an average size of 30 KB. Let’s assume 500 concurrent sessions per VM. Just caching the homepage would cost us 15 MB (500 × 30 KB).

The partial caching of non-personalized homepage parts costs next to nothing, because these parts are shared by all users and need to be stored only once. For the personalized parts we would access the domain layer and not the cache.

Domain object cache: this cache currently holds pictures for the charts service (global), profile information (per user) etc. Its size is hard to estimate.

Currently we do not know how big our cache can become on a very busy clone. Critical objects are images and other large entities. How do we prevent the cache from eating up all the memory? Should we store e.g. the charts images in the file system?
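One common answer to the memory question is a size-bounded cache with least-recently-used eviction. The sketch below uses `LinkedHashMap` in access order and its `removeEldestEntry` hook. `LruCache` is a hypothetical name, the bound is a number of entries rather than bytes (only a rough proxy when entries are large images), and the class is not thread-safe, so shared use would need e.g. `Collections.synchronizedMap`.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// A map that keeps at most maxEntries entries and evicts the least recently used one.
class LruCache<K, V> extends LinkedHashMap<K, V> {
    private final int maxEntries;

    LruCache(int maxEntries) {
        super(16, 0.75f, true);       // accessOrder = true: get() moves an entry to the end
        this.maxEntries = maxEntries;
    }

    @Override
    protected boolean removeEldestEntry(Map.Entry<K, V> eldest) {
        return size() > maxEntries;   // called after each put: drop the eldest if over the bound
    }
}
```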

Domain Object Cache:

Creating a new object cache is simple: a new factory class needs to be created by deriving from the PrefetchCacheFactory, and a new requester class needs to derive from a request base class.

To allocate a resource from the cache a client either calls PrefetchCache.prefetch() or PrefetchCache.fetch().

Prefetch is intended for requesting the resource asynchronously (i.e. the client does not wait for the resource to be available in the cache). Fetch will go out and get the requested resource synchronously, using the client’s own thread; the client might block in that case.

Both methods will first do a lookup in the cache and check if the resource is already there. And both methods will put a new resource into the cache.

A quality of service interface allows clients to specify e.g. what should happen when the requester object returns a null reference. Should it go into the cache? This could mean that subsequent requests will always retrieve the null reference from the cache instead of creating a new request that might return successfully.
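The fetch/prefetch contract described above can be sketched as follows. This is not AEPortal's `PrefetchCache`, whose code is not shown here: `SimplePrefetchCache` is hypothetical, the requester is a plain function, the null-caching QoS is a single flag, and prefetching uses `CompletableFuture.supplyAsync` on a caller-supplied thread pool.

```java
import java.util.Map;
import java.util.Optional;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutorService;
import java.util.function.Function;

class SimplePrefetchCache<V> {
    // Optional wraps the value so that a cached null can be represented.
    private final Map<String, Optional<V>> cache = new ConcurrentHashMap<>();
    private final Function<String, V> requester;   // stands in for a requester subclass
    private final boolean cacheNulls;              // QoS: may a null result go into the cache?
    private final ExecutorService pool;

    SimplePrefetchCache(Function<String, V> requester, boolean cacheNulls, ExecutorService pool) {
        this.requester = requester;
        this.cacheNulls = cacheNulls;
        this.pool = pool;
    }

    // Synchronous: look in the cache first, otherwise request in the caller's thread (may block).
    V fetch(String key) {
        Optional<V> hit = cache.get(key);
        if (hit != null) {
            return hit.orElse(null);
        }
        V value = requester.apply(key);
        if (value != null || cacheNulls) {
            cache.put(key, Optional.ofNullable(value));
        }
        return value;
    }

    // Asynchronous: returns at once; the value lands in the cache when the pool thread is done.
    // Two concurrent misses for the same key may both run the requester.
    CompletableFuture<V> prefetch(String key) {
        Optional<V> hit = cache.get(key);
        if (hit != null) {
            return CompletableFuture.completedFuture(hit.orElse(null));
        }
        return CompletableFuture.supplyAsync(() -> fetch(key), pool);
    }
}
```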

Reference Data caching:

The package comaepinfrastructure.refdata contains the basic infrastructure for reference data handling. A reference data manager (initialized during boot) reads an XML configuration file that describes which data need to be cached and also defines the QOS (aging, reload etc.). A new reference data class can easily be created (probably in the comaepAEPortal.refdata package) and a new definition added to RefdataConfigFile.xml

Note: There used to be different RefdataConfigFiles for production, test and development, due to the long load times initially. This has been fixed and there is no longer a real reason for separate configurations. Basically everything is loaded during boot.

We need to clean up the configuration files!

If you need more information on reference data, go and bug Ralf and Dmitri!

An important part of a caching infrastructure is the quality of service it can provide to different types of data. Some data are only allowed a certain amount of time in the cache. Others should not be cached at all. Some should be pre-loaded, others can be loaded lazily. Aging can be by relative or absolute time. The QOS currently available for reference data caching are described in the RefData DTD.
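The QoS variants listed above can be expressed as a small descriptor with an expiry check. `RefDataQos` is a hypothetical class and does not reproduce the RefData DTD; it assumes relative aging as a duration after loading and absolute aging as a fixed daily reload time.

```java
import java.time.Duration;
import java.time.LocalDateTime;
import java.time.LocalTime;

// Hypothetical QoS descriptor for one kind of reference data.
class RefDataQos {
    final boolean preload;          // load at boot rather than lazily (read by a loader, not shown)
    final Duration relativeAge;     // relative aging: expire this long after loading, or null
    final LocalTime absoluteReload; // absolute aging: expire at this time of day, or null

    RefDataQos(boolean preload, Duration relativeAge, LocalTime absoluteReload) {
        this.preload = preload;
        this.relativeAge = relativeAge;
        this.absoluteReload = absoluteReload;
    }

    // Has an entry that was loaded at 'loadedAt' expired by 'now'?
    boolean isExpired(LocalDateTime loadedAt, LocalDateTime now) {
        if (relativeAge != null && !now.isBefore(loadedAt.plus(relativeAge))) {
            return true;
        }
        if (absoluteReload != null) {
            // First reload point strictly after the load time.
            LocalDateTime next = loadedAt.toLocalDate().atTime(absoluteReload);
            if (!next.isAfter(loadedAt)) {
                next = next.plusDays(1);
            }
            return !now.isBefore(next);
        }
        return false;               // no aging configured (e.g. country codes)
    }
}
```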

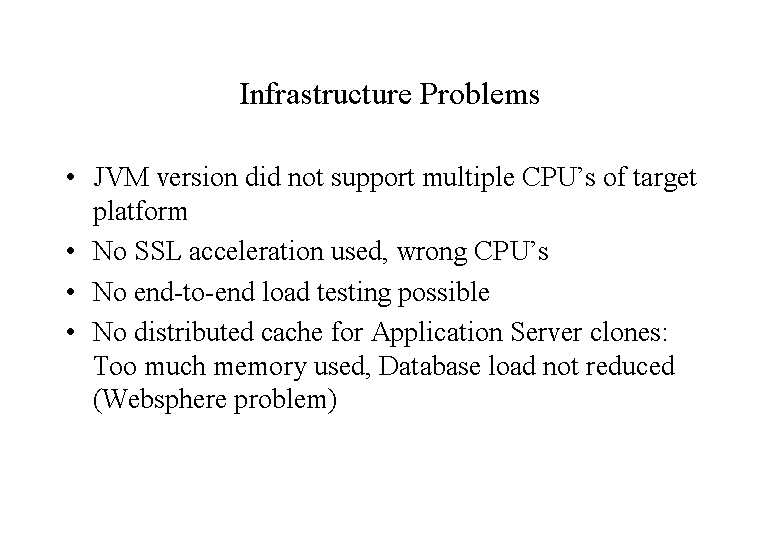

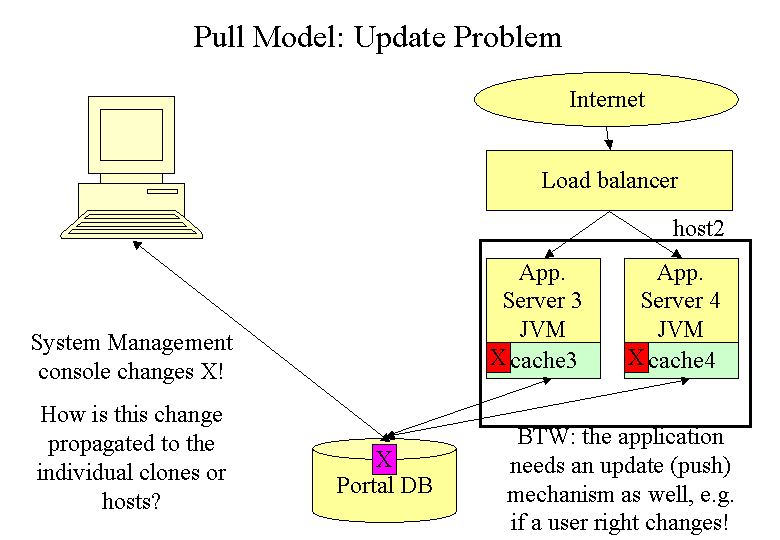

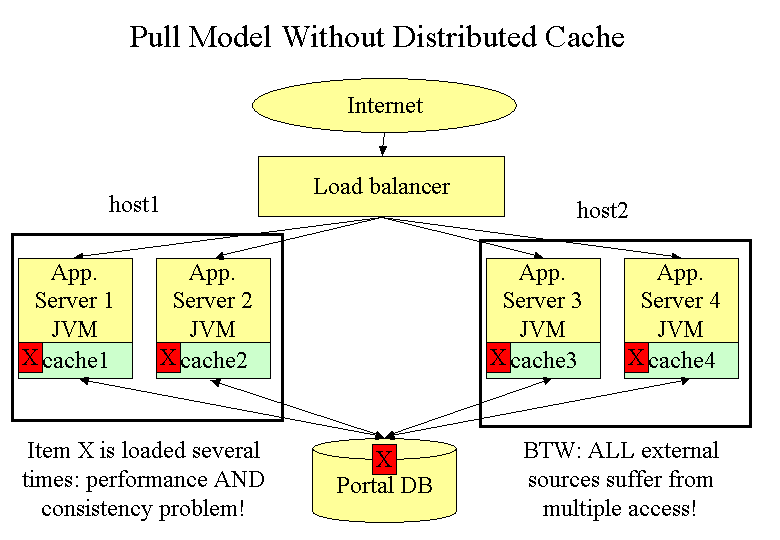

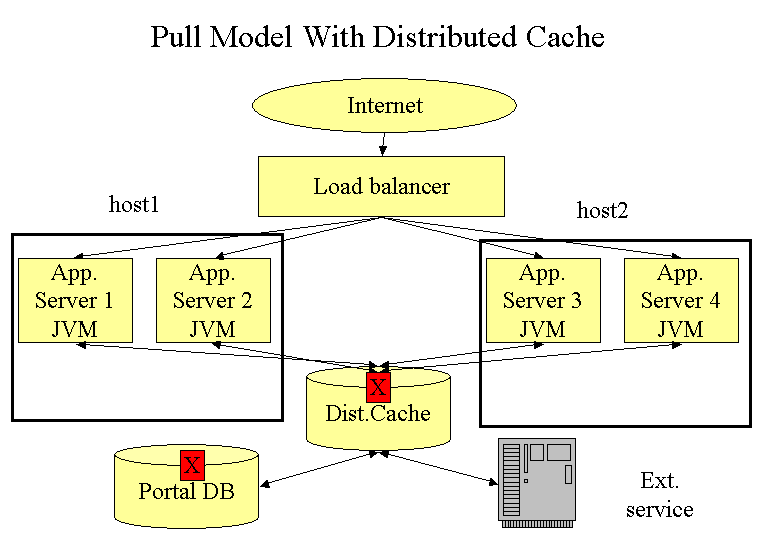

The current cache solution has three problems:

All of them are related to the peculiarities of the current physical architecture, especially the existence of several clones per machine and the lack of session binding per clone.

Note: Websphere 3.2.2 provides session affinity per clone

In effect this means that a session can use two or more clones on one machine. Since there is one cache per application or clone, one clone might already have cached certain data, but if the next request goes to a different clone on the same machine, that clone’s cache has to load the requested data again. In the worst case, with n clones on a machine, we end up loading the same data n times onto this machine. This defeats the purpose of caching and puts unnecessary load on network and database.

Besides being a performance issue, this raises a much bigger problem: what happens with data that are not read-only? Unavoidably, we will end up with the same data having different values in different caches. Currently we can only do two things about it:

Note: the 80% have not been tested yet!

User access tokens and profile entries are the most likely candidates to cause problems here.

Cache maintenance is impossible too, because we have no way to contact the individual clones. If we could, we could just as well synchronize the caches in case of changes.

This has already hit us once: a minor database change requires the database to be shut down and restarted, and there is a chance that IDs have changed. Unless the clones are recycled, they will still hold the old values in their caches.

If we give up the idea of session affinity to ONE node – e.g. if we want to achieve a higher level of fail-over, then we have the same problem between ALL nodes!

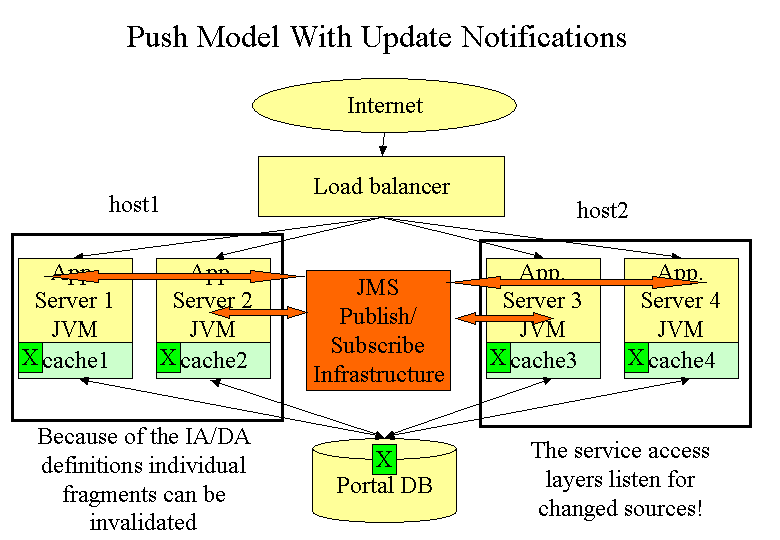

Inter-clone communication is a very important topic for the re-design. Websphere needs to provide a mechanism here or cloning does not make sense in the longer run.

In this case a change to the database would be broadcast to all clones, possibly using a topic-based publish/subscribe system, and the clones would then update the data.

The Domain object cache on each clone or application instance would then need to subscribe for each cache entry to get notification of changes.

On top of solving the cache synchronization problem this would also give us a means to inform running application instances about all kinds of changes (configuration changes, new software, database updates etc.)
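The publish/subscribe idea can be sketched in-process. Assumptions: `InvalidationBus` and `CloneCache` are hypothetical; the bus stands in for a real topic-based messaging system (e.g. JMS topics) that would carry the messages between JVMs and machines, each cache entry is its own topic as suggested above, and a change simply removes the entry so that the next access reloads it from the database.

```java
import java.util.Collections;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.function.Consumer;

// In-process stand-in for a topic-based publish/subscribe system.
class InvalidationBus {
    private final Map<String, List<Consumer<String>>> subscribers = new ConcurrentHashMap<>();

    void subscribe(String key, Consumer<String> listener) {
        subscribers.computeIfAbsent(key, k -> new CopyOnWriteArrayList<>()).add(listener);
    }

    // Called by whoever changes the database.
    void publish(String key) {
        for (Consumer<String> listener : subscribers.getOrDefault(key, Collections.emptyList())) {
            listener.accept(key);
        }
    }
}

// The domain object cache of one clone: it subscribes once per cache entry.
class CloneCache {
    private final Map<String, String> entries = new ConcurrentHashMap<>();
    private final Set<String> subscribed = ConcurrentHashMap.newKeySet();
    private final InvalidationBus bus;

    CloneCache(InvalidationBus bus) {
        this.bus = bus;
    }

    void put(String key, String value) {
        entries.put(key, value);
        if (subscribed.add(key)) {
            bus.subscribe(key, entries::remove);   // on change: drop the stale entry
        }
    }

    String get(String key) {
        return entries.get(key);
    }
}
```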

CARP (Cache Array Routing Protocol) could be used to connect the individual caches of all clones.

"CARP is a hashing mechanism which allows a cache to be added or removed from a cache array without relocating more than a single cache’s share of objects. [..] CARP calculates a hash not only for the keys referencing objects (e.g. URL’s) but also for the address of each cache. It then combines key hash values with each address hash value using bitwise XOR (exclusive OR). The primary owner for an object is the one resulting in the highest combined hash score." (15)

This solution would only provide a means to synchronize (actually, to avoid the synchronization problem) several caches.
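The highest-score rule from the quote can be sketched as follows. This is a simplified illustration, not the CARP specification: `HighestScoreRouter` is hypothetical, it uses `String.hashCode()` plus a SplitMix64 mixing step instead of the hash and combination functions CARP prescribes, and it ignores CARP's load factors. The same scheme is also known as rendezvous or highest-random-weight hashing.

```java
import java.util.List;

class HighestScoreRouter {
    // SplitMix64 finalizer: spreads the bits so that XOR-combined hashes score well.
    private static long mix(long z) {
        z = (z ^ (z >>> 30)) * 0xbf58476d1ce4e5b9L;
        z = (z ^ (z >>> 27)) * 0x94d049bb133111ebL;
        return z ^ (z >>> 31);
    }

    private static long hash(String s) {
        return mix(s.hashCode());
    }

    // The owner of a key (e.g. a URL) is the cache with the highest combined score.
    static String ownerOf(String key, List<String> cacheAddresses) {
        long keyHash = hash(key);
        String best = null;
        long bestScore = Long.MIN_VALUE;
        for (String address : cacheAddresses) {
            long score = mix(keyHash ^ hash(address));     // combine key and cache hash by XOR
            if (best == null || score > bestScore) {
                best = address;
                bestScore = score;
            }
        }
        return best;
    }
}
```

Because each key simply goes to the highest-scoring cache, removing a cache only moves the keys that cache owned; all other keys keep their owner.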

So far we have learned about the following deficiencies of our Model 2 architecture:

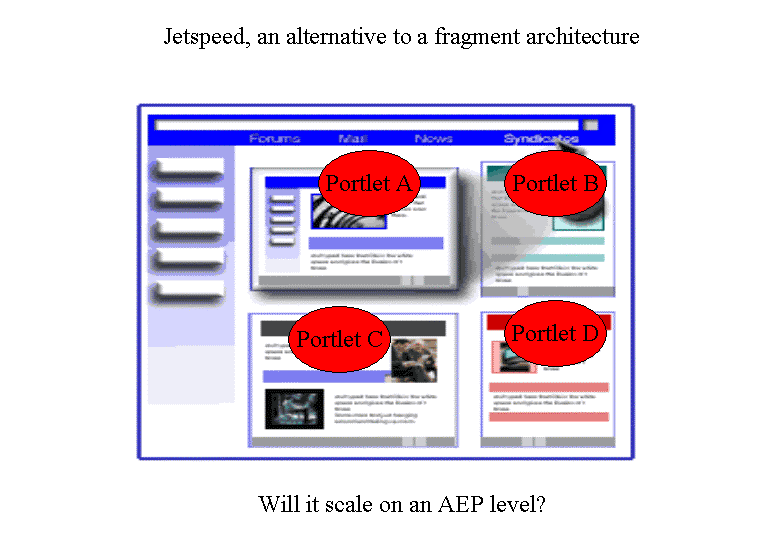

"Portlets" were designed to better represent the aggregation of information within a portal page. The next chapter shows that "Portlets" are still somehow vague – are they applications or information? What kind of user experience and degree of information integration do they provide? What are the essential differences to our handler based approach? And finally: are portlets enough?

At first glance portlets seem to represent pretty much the same "boxed" portal page concept as our handlers above: an independent piece of screen real estate is represented by an individual portlet.

The following text from IBM gives (a lot) more definitions. I have highlighted the most important bits: http://www-4.ibm.com/software/webservers/portal/portlet.html.

What is a Portlet?

"Portlets are the visible active components end users see within their portal pages. Similar to a window in a PC desktop, each portlet owns a portion of the browser or PDA screen where it displays results. Portlets can be as simple as your email or as complex as a sales forecast from a CRM application.

From a user's view, a portlet is a content channel or application to which a user subscribes, adds to their personal portal page, and configures to show personalized content.

From a content provider's view, a portlet is a means to make available their content

From a portal administrator's view, a portlet is a content container that can be registered with the portal, so that users may subscribe to it.

From a portal's point of view, a portlet is a component rendered into one of its pages.

From a technical point of view, a portlet is a piece of code that runs on a portal server and provides content to be embedded into portal pages. In the simplest terms, a portlet is a Java servlet that operates inside a portal.

Portlets are often small portions of applications. However, portlets do not replace an application's numerous displays and transactions. Instead, a portlet is often used for occasional access to application data or for high profile information that needs to be displayed next to crucial information from other applications. In some cases, this is like an executive information system or a balanced scorecard key performance indicators display. In other cases, it may simply be a productivity enhancement where a user can have all the tools and information needed to quickly access multiple applications, documents, and results. For example, a procurement analyst may want to see online vendor product catalogs with prices side by side with current inventory levels from the ERP system, and this next to a business intelligence analysis of item usage for the last 18 months. In any case, a portlet provides a real-time display of vital information based on the user's preferences.

Portlets can be built by the information technology department, systems integrators, independent software vendors, and of course, IBM. Once a portlet is developed and tested, it is stored into the portlet catalog. By browsing the portlet catalog, an end user can then select a portlet and place it into one of their own portal pages."

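To summarize, a portlet can be:

- a content channel or an application (user's view)
- a means of making content available (content provider's view)
- a content container (administrator's view)
- a component rendered into a page (portal's view)
- a piece of code, i.e. a servlet running inside the portal (technical view)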

The definition mixes the results of processing (content) with the means of processing (code, application, container). The difference is quite important: I cannot cache the code but I can cache results!

The main differences to the handler approach:

Portlets seem to be the proper mechanism to create "Windows" or Yahoo-style portal pages that combine independent pieces of information or applications for convenience.

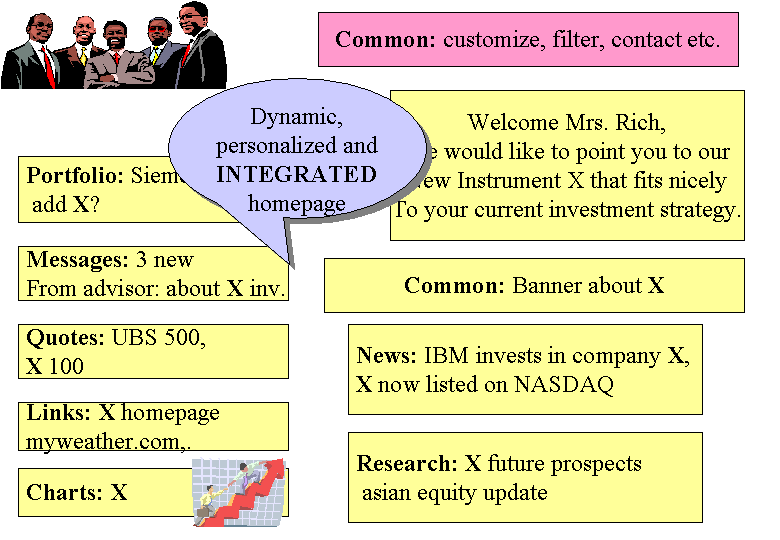

They do NOT provide an infrastructure for sites that want a much higher level of information integration, in the sense of combining information from various sources (applications, data sources etc.) into integrated information of higher value.

The portlet approach reflects the different information sources behind the enterprise portal; it does NOT integrate them in the sense of integrated and linked CONTENT.

High-performance sites need a separate fragment caching layer that allows quick assembly of results without going through portlet processing. This layer must provide naming and addressing of result fragments.

Fragments are a concept independent of base technology assumptions like J2EE or Apache's Jetspeed. Fragments provide an information view on content and stay valid even if the base technology changes.

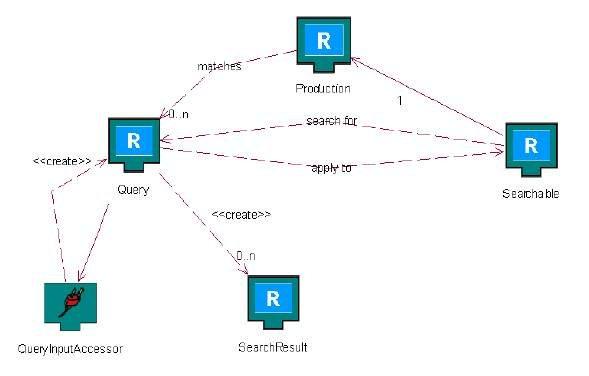

Fragments are pieces of information that have an independent meaning and identity in the user’s conceptual model. This can be a piece of news or research or a single quote.

Fragments can contain other fragments or references to those.

Fragments have names and identities and can be associated (via a catalog) with a system identifier that allows the system to load or store a fragment through a specific service in a specific place.
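A minimal sketch of such a catalog, assuming a system identifier is simply a service name plus a location string. `SystemId`, `FragmentCatalog` and the example names are hypothetical and not AEPortal classes; the point is only that the fragment name stays free of service details while the catalog resolves it.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical system identifier: the service that loads/stores a fragment
// and the place where it lives. Not an AEPortal class.
final class SystemId {
    final String service;
    final String location;

    SystemId(String service, String location) {
        this.service = service;
        this.location = location;
    }

    @Override
    public String toString() {
        return service + ":" + location;
    }
}

// Hypothetical catalog: maps fragment names from the user's conceptual model
// to system identifiers, so fragments themselves carry no service details.
final class FragmentCatalog {
    private final Map<String, SystemId> entries = new HashMap<>();

    void register(String fragmentName, SystemId id) {
        entries.put(fragmentName, id);
    }

    // Returns null if the catalog does not know the fragment name.
    SystemId lookup(String fragmentName) {
        return entries.get(fragmentName);
    }
}
```

Because only the catalog knows the service, moving a fragment to a different backend means changing one catalog entry, not every fragment that references it.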

Fragments can have one or more associated subjects. They can form a dependency chain of fragments: if fragments lower in the chain change, the higher fragments need to be re-validated or updated.

Fragments are the basic unit for caching.

Fragments can be persisted and have a read/write interface.

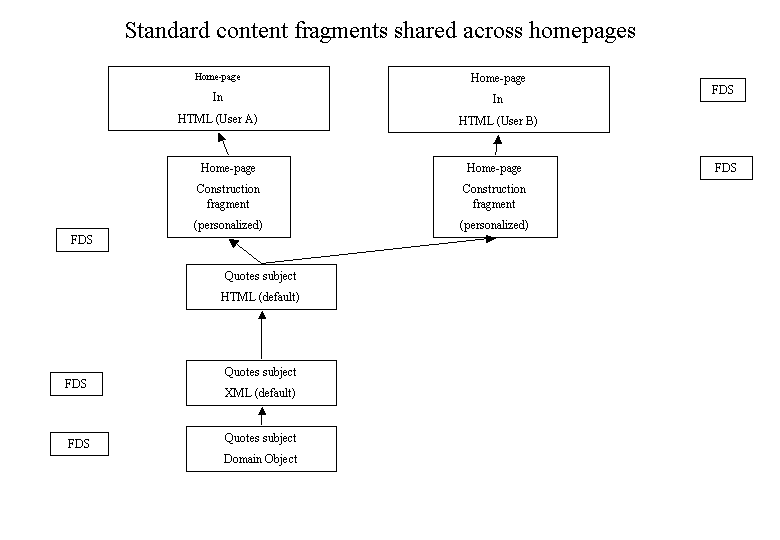

Fragments appear in various formats. An example:

The subject "news service" of the homepage includes several fragments in one fragment chain.

HTML, XML (personalized), XML (common), domain object, database row(s)

The difference between these fragments is the set of transformation processes that have been applied to them. A high-speed site needs to store fragments in various formats to avoid repeated and costly transformations. At the same time, the site must guarantee the consistency of the subject, e.g. the news block on the homepage, by invalidating all fragments in the chain.
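One way to keep several formats of a fragment and still invalidate them consistently is to group every cached (fragment, format) entry under its subject. The sketch below assumes string keys and string content; `FormatCache` and the format names are hypothetical.

```java
import java.util.HashMap;
import java.util.HashSet;
import java.util.Map;
import java.util.Set;

// Hypothetical cache that keeps one fragment in several formats
// (e.g. "html", "xml-pers", "xml-common") and groups all entries by subject.
final class FormatCache {
    private final Map<String, String> entries = new HashMap<>();             // key -> cached content
    private final Map<String, Set<String>> keysBySubject = new HashMap<>();  // subject -> keys in its chain

    private static String key(String fragment, String format) {
        return fragment + "/" + format;
    }

    void put(String subject, String fragment, String format, String content) {
        String k = key(fragment, format);
        entries.put(k, content);
        keysBySubject.computeIfAbsent(subject, s -> new HashSet<>()).add(k);
    }

    // Returns null on a miss; the caller must then run the transformation.
    String get(String fragment, String format) {
        return entries.get(key(fragment, format));
    }

    // Keeps the subject consistent: drops every format in its chain at once.
    void invalidateSubject(String subject) {
        Set<String> keys = keysBySubject.remove(subject);
        if (keys != null) {
            for (String k : keys) {
                entries.remove(k);
            }
        }
    }
}
```

Invalidating "news service" removes the HTML and both XML forms together, so no stale intermediate format can be used to rebuild the page.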

Both a single page and the homepage can be composite fragments. Composite fragments are described by Fragment Definition Sets (FDS), which are fragments themselves and define what can or must go into a certain fragment. A composite fragment contains complete sub-fragments or references to other fragments.

The fragment definition sets form an object dependency graph (ODG). This graph is used to invalidate fragments and fragment chains. The real invalidation needs to be performed by going through the object dependency graph formed by the fragment instances themselves.
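Invalidation through the instance graph amounts to a transitive traversal from the changed fragment to everything built on top of it. A minimal sketch, assuming fragment instances are identified by strings; `DependencyGraph` and its methods are hypothetical.

```java
import java.util.ArrayDeque;
import java.util.Collections;
import java.util.Deque;
import java.util.HashMap;
import java.util.HashSet;
import java.util.LinkedHashSet;
import java.util.Map;
import java.util.Set;

// Hypothetical object dependency graph over fragment instances.
// An edge source -> dependent means the dependent is built from the source.
final class DependencyGraph {
    private final Map<String, Set<String>> dependents = new HashMap<>();

    void addDependency(String dependent, String source) {
        dependents.computeIfAbsent(source, k -> new HashSet<>()).add(dependent);
    }

    // Returns the changed fragment plus every fragment that transitively depends on it.
    // The visited set makes sure shared fragments are handled only once.
    Set<String> affectedBy(String changed) {
        Set<String> visited = new LinkedHashSet<>();
        Deque<String> work = new ArrayDeque<>();
        work.add(changed);
        while (!work.isEmpty()) {
            String current = work.poll();
            if (visited.add(current)) {
                for (String next : dependents.getOrDefault(current, Collections.emptySet())) {
                    work.add(next);
                }
            }
        }
        return visited;
    }
}
```

Only fragments above the change are touched: a change to the common XML invalidates the personalized XML, the news HTML and the homepage, but not the database rows or an unrelated quotes block.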

The lifetime of individual fragments can be very different and is defined either by the FDS or other rules.

Every fragment can be referenced from various other fragments. As long as a fragment is only referenced, an update to it becomes effective immediately. Derived fragments that embed the sub-fragment's content, however, still have to be updated as well.

Every fragment has an associated fragment validator (FV). This object is contacted whenever a system component needs to find out whether a certain fragment is still valid. If the answer is no, the fragment should be invalidated. In addition, the fragment should know how to update itself, or at least contain all the meta-information or configuration information needed to make this possible.
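The difference between referencing and embedding can be shown with two tiny composites over a shared store. All class names here are hypothetical; the store stands in for wherever current fragment contents live.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical store of current fragment contents, addressed by name.
final class FragmentStore {
    private final Map<String, String> contents = new HashMap<>();

    void write(String name, String content) {
        contents.put(name, content);
    }

    String read(String name) {
        return contents.get(name);
    }
}

// Composite that only references its sub-fragment: the reference is resolved
// on every render, so an update of the sub-fragment is visible immediately.
final class ReferencingPage {
    private final FragmentStore store;
    private final String subName;

    ReferencingPage(FragmentStore store, String subName) {
        this.store = store;
        this.subName = subName;
    }

    String render() {
        return "<div>" + store.read(subName) + "</div>";
    }
}

// Derived composite that embeds a copy of the sub-fragment's content when it
// is built: it stays stale until it is rebuilt.
final class EmbeddingPage {
    private final String html;

    EmbeddingPage(FragmentStore store, String subName) {
        this.html = "<div>" + store.read(subName) + "</div>";
    }

    String render() {
        return html;
    }
}
```

Embedding is faster to serve but puts the embedding fragment on the invalidation path of its sub-fragment; referencing avoids that at the cost of a lookup per render.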
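A minimal sketch of a validator plus a self-updating fragment, assuming the underlying data exposes a version number that changes on every update. `FragmentValidator`, `VersionValidator` and `CachedFragment` are hypothetical; a real validator might compare timestamps or ask a back-end instead.

```java
import java.util.function.LongSupplier;
import java.util.function.Supplier;

// Hypothetical fragment validator (the FV): answers "is this fragment still valid?"
interface FragmentValidator {
    boolean isValid(CachedFragment fragment);
}

// Hypothetical validator comparing the data version a fragment was built from
// with the current version of the underlying data.
final class VersionValidator implements FragmentValidator {
    private final LongSupplier currentVersion;

    VersionValidator(LongSupplier currentVersion) {
        this.currentVersion = currentVersion;
    }

    @Override
    public boolean isValid(CachedFragment fragment) {
        return fragment.builtFromVersion() == currentVersion.getAsLong();
    }
}

// Hypothetical fragment that carries what it needs to update itself.
final class CachedFragment {
    private final FragmentValidator validator;
    private final LongSupplier sourceVersion; // version of the underlying data
    private final Supplier<String> builder;   // how to (re)build the content
    private String content;
    private long builtFrom = -1;
    private int rebuilds;

    CachedFragment(FragmentValidator validator, LongSupplier sourceVersion, Supplier<String> builder) {
        this.validator = validator;
        this.sourceVersion = sourceVersion;
        this.builder = builder;
    }

    long builtFromVersion() {
        return builtFrom;
    }

    int rebuilds() {
        return rebuilds;
    }

    // Asks the validator first; rebuilds only if the fragment is missing or invalid.
    String content() {
        if (content == null || !validator.isValid(this)) {
            builtFrom = sourceVersion.getAsLong();
            content = builder.get();
            rebuilds++;
        }
        return content;
    }
}
```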

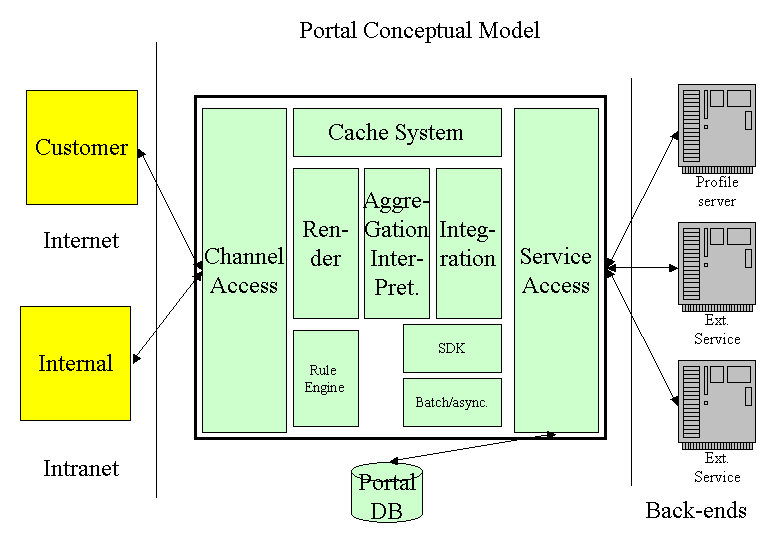

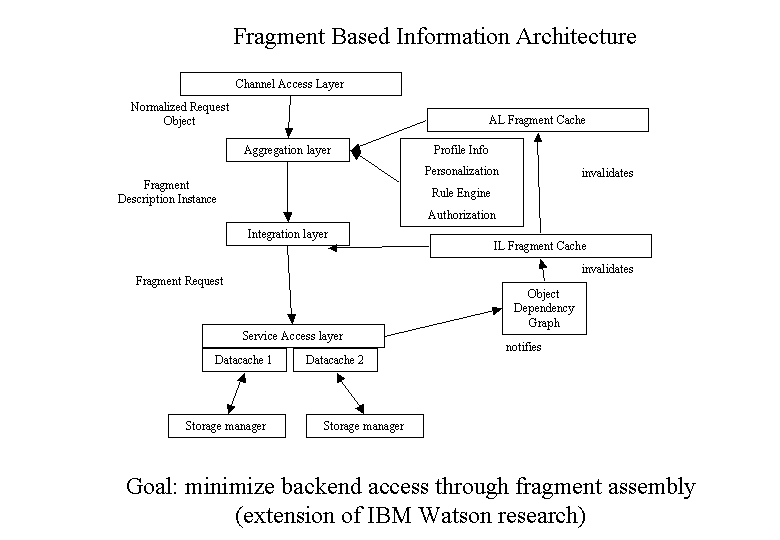

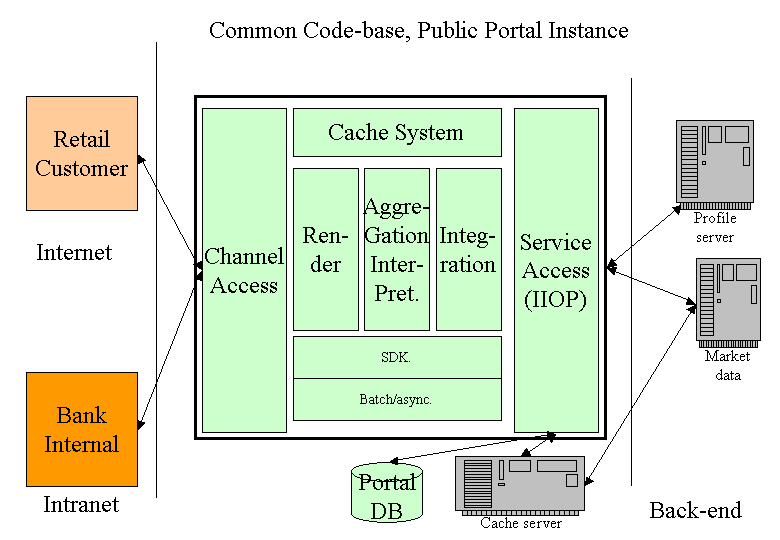

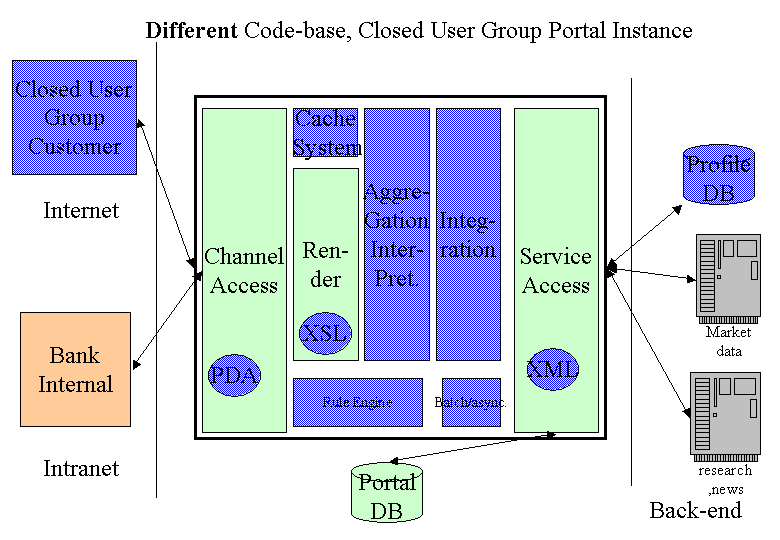

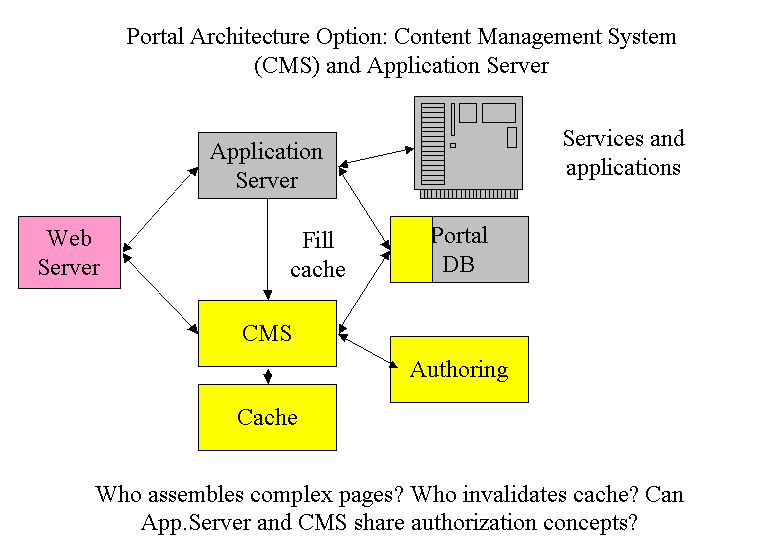

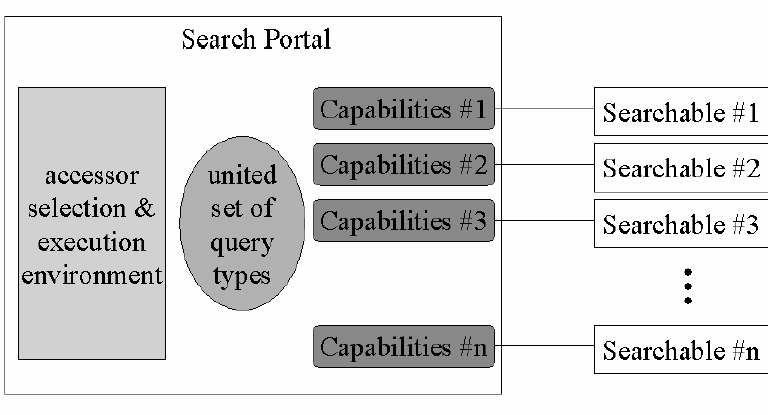

The diagram below gives an overview of the information flow in a portal. The explanation starts, as usual, with an incoming request, but there are certainly asynchronous information gathering processes active at the same time (read-ahead etc.).

All incoming requests from the various channels go through the channel access layer (CAL). This layer normalizes the requests by creating a platform-independent request/response object pair.

The request object enters the aggregation layer (AL). It is the responsibility of this layer to map the request to a certain fragment (a page or parts of it).

The first task of the AL is to create a validator for the requested fragment. Using this validator, the layer can ask the caches (e.g. a cache of fully rendered pages) whether the fragment is cached and still valid. If it is, the AL returns immediately.
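The fast path of the aggregation layer can be sketched as "look up, validate, return or assemble". In this sketch a simple time-to-live rule stands in for whatever check a real validator performs, and `AggregationLayer`, `Validator` and the assembler function are hypothetical.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Function;

// Hypothetical stand-in for the aggregation layer's full-page cache lookup.
final class AggregationLayer {
    // Hypothetical validator created per requested fragment.
    interface Validator {
        boolean stillValid(long cachedAt, long now);
    }

    private static final class Entry {
        final String html;
        final long cachedAt;

        Entry(String html, long cachedAt) {
            this.html = html;
            this.cachedAt = cachedAt;
        }
    }

    private final Map<String, Entry> pageCache = new HashMap<>();
    private final Function<String, String> assembler; // slow path: build the fragment
    private int assemblies;

    AggregationLayer(Function<String, String> assembler) {
        this.assembler = assembler;
    }

    String handle(String fragmentName, Validator validator, long now) {
        Entry e = pageCache.get(fragmentName);
        if (e != null && validator.stillValid(e.cachedAt, now)) {
            return e.html; // cached and still valid: return immediately
        }
        String html = assembler.apply(fragmentName);
        assemblies++;
        pageCache.put(fragmentName, new Entry(html, now));
        return html;
    }

    int assemblies() {
        return assemblies;
    }
}
```

With a 60-unit time-to-live, requests at times 0 and 30 cause one assembly, and a request at time 90 causes a second one.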

Alternative: We could use Data Update Propagation (DUP) to notify caches about invalidation and avoid the validator concept at this level. Validators would then only be necessary if something changes and the scope of associated changes needs to be determined.
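A much simplified, push-style sketch in the spirit of DUP: the component that sees a data change notifies all registered caches, which evict affected entries without any per-request validation. The names are hypothetical, and a full DUP implementation would propagate the change through the object dependency graph rather than by a single subject key.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical listener implemented by every cache that wants update notifications.
interface UpdateListener {
    void dataChanged(String subject);
}

// Hypothetical propagator: fans out a data change to all registered caches.
final class UpdatePropagator {
    private final List<UpdateListener> listeners = new ArrayList<>();

    void register(UpdateListener l) {
        listeners.add(l);
    }

    void publish(String subject) {
        for (UpdateListener l : listeners) {
            l.dataChanged(subject);
        }
    }
}

// Hypothetical subject-keyed cache that evicts its entry when notified.
final class SubjectCache implements UpdateListener {
    private final Map<String, String> entries = new HashMap<>();

    void put(String subject, String content) {
        entries.put(subject, content);
    }

    String get(String subject) {
        return entries.get(subject);
    }

    @Override
    public void dataChanged(String subject) {
        entries.remove(subject);
    }
}
```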

If no suitable fragment was cached, a fragment definition instance for this user is either created (from the profile information) or retrieved from a cache (with write-through). The fragment definition instance contains the fragment type information, filtered by the personal settings and authorization information:

User X requests the fragment "homepage". The type definition of the homepage fragment states that a homepage instance can contain fragments of type "quotes" and "news". The access control subsystem and the user profile contain the information that both fragments are active (not minimized), that the "news" fragment has been personalized (topics, rows), and that the "quotes" fragment shows the default quotes information (no personal selections, no GUI changes such as more rows).

Please note: if the fragment definition instance has been cached too, the aggregation layer saves the work of creating a user-specific fragment request!

The best case, of course, is that all fragments exist in the cache and are valid. (Do they even exist if they are not valid?)

Please note: system information from catalogs maps fragments to services; the fragment itself does not contain that information. Aggregation and integration deal only with fragments, not with services (which will probably use something like a handler to retrieve fragments).
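The homepage example can be sketched as filtering a type definition by authorization and profile data. The fragment types "homepage", "quotes" and "news" come from the text; `UserProfile`, `Slot`, `DefinitionInstances` and the settings keys are hypothetical.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Hypothetical user profile: access rights, minimized fragments and personal settings.
final class UserProfile {
    final Set<String> allowed = new HashSet<>();    // from access control
    final Set<String> minimized = new HashSet<>();
    final Map<String, Map<String, String>> settings = new HashMap<>();
}

// One entry of a fragment definition instance: child type plus its settings.
final class Slot {
    final String type;
    final Map<String, String> settings;

    Slot(String type, Map<String, String> settings) {
        this.type = type;
        this.settings = settings;
    }

    boolean isDefault() {
        return settings.isEmpty(); // no personal selections
    }
}

final class DefinitionInstances {
    // Hypothetical type definitions: which child types a fragment type may contain.
    static final Map<String, List<String>> TYPES = new HashMap<>();

    static {
        TYPES.put("homepage", Arrays.asList("quotes", "news"));
    }

    // Filters the type definition by authorization and personal settings.
    static List<Slot> instanceFor(String fragmentType, UserProfile p) {
        List<Slot> slots = new ArrayList<>();
        for (String child : TYPES.getOrDefault(fragmentType, Collections.emptyList())) {
            if (p.allowed.contains(child) && !p.minimized.contains(child)) {
                slots.add(new Slot(child, p.settings.getOrDefault(child, Collections.emptyMap())));
            }
        }
        return slots;
    }
}
```

The resulting list is exactly what can be cached per user: it is small, and it spares the aggregation layer a profile and access-control lookup on the next request.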

AEPortal uses a pooling infrastructure that supports various qualities of service (QoS), e.g. aging by time or by request count. This infrastructure should be used for all pooling needs.

Note: Pooling is not the same as caching because cached objects need a unique name or id so that clients can address them. This is not necessary for pooling. Still, both could probably be implemented using the same base classes. This would be a topic for the re-design.

AEPortal uses a generic object pool called "Minerva". Minerva is now part of the JBoss open source project (http://javatree.web.cern.ch/javatree) and also provides advanced JDBC connection pooling (JDBC SE, two-phase commit support), but we only use the generic object pool. It is in the package org.jboss.minerva.pools. Infrastructure now has a package comaepinfrastructure.pooling which includes standard factories and a main pool factory. The package currently assumes a couple of default pools, e.g. DOMParserPool, but new pools can be created programmatically. The process is similar to the creation of new caches: you need a new factory with a method that creates the specific object and, optionally, that object implements the PooledObject interface.
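The factory-plus-pool structure can be sketched in plain Java. This is not Minerva's API: `ObjectFactory`, `PooledObject`, `ObjectPool` and `ParserStub` are hypothetical, and `PooledObject` is only modelled on the idea of an optional reset hook described above.

```java
import java.util.ArrayDeque;
import java.util.Deque;

// Hypothetical factory: one per pooled type, knows how to create a fresh instance.
interface ObjectFactory<T> {
    T create();
}

// Hypothetical optional hook: lets an object return to its initial state.
interface PooledObject {
    void reset();
}

// Hypothetical bounded pool with an allocate/free protocol.
final class ObjectPool<T> {
    private final ObjectFactory<T> factory;
    private final Deque<T> idle = new ArrayDeque<>();
    private final int maxIdle;
    private int created;

    ObjectPool(ObjectFactory<T> factory, int maxIdle) {
        this.factory = factory;
        this.maxIdle = maxIdle;
    }

    synchronized T getObject() {
        T obj = idle.poll();
        if (obj == null) {
            obj = factory.create(); // pool empty: build a new instance
            created++;
        }
        return obj;
    }

    synchronized void releaseObject(T obj) {
        if (obj instanceof PooledObject) {
            ((PooledObject) obj).reset(); // back to the initial state before reuse
        }
        if (idle.size() < maxIdle) {
            idle.push(obj);
        } // otherwise drop it and let the garbage collector take it
    }

    synchronized int created() {
        return created;
    }
}

// Stand-in for a stateful, non-re-entrant object such as an XML parser.
final class ParserStub implements PooledObject {
    String entityResolver = "default";

    @Override
    public void reset() {
        entityResolver = "default";
    }
}
```

A second `getObject()` after a release returns the same, reset instance instead of creating a new one.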

What is missing:

- an XML description of default pools so that the system can configure the pool factory at boot time (as we do with reference data)
- a way to do the same at runtime from within applications or services (to make AEPortal dynamically extensible)
- more quality-of-service features: aging by time and by request count

Documentation: twiki, search for "ObjectPool".
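Aging by time and by request count can be sketched as two retirement rules checked on acquire and release. `AgingPool` and its parameters are hypothetical; the clock is injected so the policy can be tested, and in production it could be `System::currentTimeMillis`.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.IdentityHashMap;
import java.util.Map;
import java.util.function.LongSupplier;
import java.util.function.Supplier;

// Hypothetical pool with two aging policies: an object is retired once it is
// older than maxAgeMillis or has been handed out maxUses times.
final class AgingPool<T> {
    private static final class Slot<E> {
        final E obj;
        final long createdAt;
        int uses;

        Slot(E obj, long createdAt) {
            this.obj = obj;
            this.createdAt = createdAt;
        }
    }

    private final Supplier<T> factory;
    private final LongSupplier clock;
    private final long maxAgeMillis;
    private final int maxUses;
    private final Deque<Slot<T>> idle = new ArrayDeque<>();
    private final Map<T, Slot<T>> lent = new IdentityHashMap<>();

    AgingPool(Supplier<T> factory, LongSupplier clock, long maxAgeMillis, int maxUses) {
        this.factory = factory;
        this.clock = clock;
        this.maxAgeMillis = maxAgeMillis;
        this.maxUses = maxUses;
    }

    synchronized T acquire() {
        Slot<T> slot = idle.poll();
        while (slot != null && expired(slot)) {
            slot = idle.poll(); // drop retired objects
        }
        if (slot == null) {
            slot = new Slot<>(factory.get(), clock.getAsLong());
        }
        slot.uses++;
        lent.put(slot.obj, slot);
        return slot.obj;
    }

    synchronized void release(T obj) {
        Slot<T> slot = lent.remove(obj);
        if (slot != null && !expired(slot)) {
            idle.push(slot); // still young enough: keep it for reuse
        }
    }

    private boolean expired(Slot<T> slot) {
        return slot.uses >= maxUses || clock.getAsLong() - slot.createdAt >= maxAgeMillis;
    }
}
```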

We pool objects to save either time (if an object takes long to build) or memory (if an object has a large footprint).

The "what" is harder to answer: typically heavyweight objects that are not re-entrant because they keep some state, e.g. XML parsers. Right now two threads need two XML parser instances because each might install a different entity manager etc. There is only one requirement: pooled objects must be "resettable", i.e. a new client thread (or the pool itself, when the object is returned) can put the object back into its initial state.

Typically objects that get pooled are:

Unlike caching, pooling follows an allocate/free pattern. Objects that are not "freed" after use are no longer available to other threads.

Sometimes it is hard to retrofit a piece of code with pooling because the moment where the pooled resource needs to be returned to the pool cannot be determined.

Example 5.2. A pooling example:

    // before pooling
    class ResourceUser {
        private final Resource mResource;      // private instance, never shared

        public ResourceUser() {
            mResource = new Resource();        // get a new instance
            mResource.setSomeMode(x);          // initialize it
        }

        public String useResource(String foo) {
            return mResource.parse(foo);
        }
    }

Given this class, when would you return a pooled resource to the pool?

    // with pooling
    class ResourceUser {
        // 'pool' is the shared resource pool, assumed to be reachable from here

        public ResourceUser() {
        }

        private Resource getResource() {
            Resource res = (Resource) pool.getResource();  // get a pooled instance
            res.setSomeMode(x);                            // initialize it
            return res;
        }

        private void freeResource(Resource res) {
            pool.releaseObject(res);
        }

        public String useResource(String foo) {
            Resource res = getResource();                  // get resource
            try {
                return res.parse(foo);                     // use it
            } finally {
                freeResource(res);                         // return it immediately, even on error
            }
        }
    }

Note

With pooling, you can no longer let an object that holds a pooled resource be collected by the garbage collector without returning the resource first. The code is designed for short-term use of a resource. In the non-pooled version the resource is private to class ResourceUser. This is expensive, but it cannot create a bottleneck. In the pooled version, if class ResourceUser does not call freeResource() after every use, it creates a bottleneck in the system. Of course, if class ResourceUser used the resource in a tight, non-blocking loop, it would probably be better to hold on to one instance and avoid the pool management overhead.

GUI design has both a subjective and an objective impact on the speed of a portal. One subjective effect is, for example, how the user perceives the load time of the homepage.

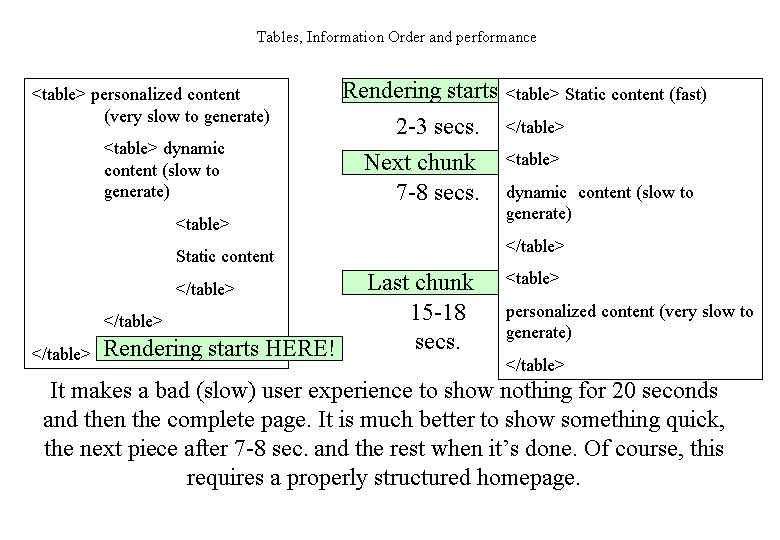

As we have seen, homepage processing is fairly intensive and takes a while. This leads to wait times of up to 20 seconds, which the usability literature usually considers unacceptable (it speaks of the magic 8 seconds after which a user leaves the page). This may not hold for an enterprise portal, because users save a lot of time through the high degree of service and information integration (single sign-on, aggregated reports etc.), but GUI design can nevertheless reduce the perceived waiting time. The trick can be seen e.g. at sites like www.excite.com and works like this:

To exploit this effect, GUI design can also have an objective impact on load time by ordering the content so that it is optimized for incremental loading.

GUI information ordering

In most cases this means designing the GUI to show static content at the top of the page, dynamic but non-personalized content in the middle, and reserving the bottom area (the "longest" area) for dynamic, personalized information, which presumably takes the longest to collect on the server side.

What technically prevents incremental loading is the use of one big table that encloses all of the homepage content. While this is convenient from a layout point of view, it prevents most browsers from rendering those parts of the homepage that have already been received. Instead, the browser waits for the closing table tag (at the end of the homepage HTML stream, which can be around 50 KB later) before it starts rendering.
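Both ideas, information ordering and avoiding the one big table, can be sketched as a page writer that renders each block as its own closed table and flushes after each one. `IncrementalPage` is hypothetical and writes to any `PrintWriter`; in a servlet this would typically be the response writer. Whether a browser actually renders early still depends on the browser and on proxies between server and client.

```java
import java.io.PrintWriter;
import java.util.List;
import java.util.function.Supplier;

// Hypothetical page writer: each content block is rendered as its own table
// and flushed immediately, so the browser can display it before the rest arrives.
final class IncrementalPage {
    // Blocks are expected in display order: static first, then dynamic,
    // then dynamic and personalized content (the slowest to produce).
    static void write(PrintWriter out, List<Supplier<String>> blocks) {
        out.write("<html><body>");
        for (Supplier<String> block : blocks) {
            out.write("<table><tr><td>");
            out.write(block.get());          // may be slow for personalized content
            out.write("</td></tr></table>"); // closed table: renderable now
            out.flush();                     // push this part to the client
        }
        out.write("</body></html>");
        out.flush();
    }
}
```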

Much of this document has already dealt with performance and throughput issues. Here I would like to collect some more ideas from the AEPortal team on throughput and performance improvements.

Certain Java styles will have a very strong negative impact on system throughput:

In the end it was necessary to work through Jack Shirazi's book on Java performance to fix the worst problems. It turned out, for example, that throwing and catching an exception costs as much as a couple of hundred lines of Java code, or up to 400 ms.

DO NOT USE EXCEPTIONS IN PERFORMANCE CRITICAL SECTIONS – EVEN IF IT IS YOUR "STYLE"!
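A typical case is parsing values such as a personalized row count, where bad input is common. The sketch below makes bad input an ordinary return value instead of a `NumberFormatException`; `FastParse` is hypothetical, and its fast variant deliberately accepts only plain non-negative numbers of up to nine digits so that no overflow check is needed. No timing figures are implied.

```java
// Hypothetical helper for a hot path: checks the input up front instead of
// using NumberFormatException for control flow.
final class FastParse {
    // Returns the parsed value, or defaultValue if text is not a plain
    // non-negative decimal number of at most 9 digits (which always fits an int).
    static int parseOrDefault(String text, int defaultValue) {
        if (text == null || text.isEmpty() || text.length() > 9) {
            return defaultValue;
        }
        int value = 0;
        for (int i = 0; i < text.length(); i++) {
            char c = text.charAt(i);
            if (c < '0' || c > '9') {
                return defaultValue; // invalid input: no exception thrown
            }
            value = value * 10 + (c - '0');
        }
        return value;
    }

    // The exception-driven style warned against above, for comparison.
    static int parseWithException(String text, int defaultValue) {
        try {
            return Integer.parseInt(text);
        } catch (NumberFormatException e) {
            return defaultValue;
        }
    }
}
```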

Excessive object creation and garbage collection can only be avoided using advanced caching and pooling strategies and, last but not least, interface designs that avoid useless copies. This goes deep into the architecture.

The old saying "first get it going, then optimize" DOES NOT WORK HERE!

The latest garbage collectors are generational: they scan older data less frequently. This is very good for large caches, which would otherwise cause a lot of GC activity.

Excessive synchronization is very common. To avoid it, the framework classes in the critical performance path are either singletons or provide static methods.